About this mod

ValleyTalk brings infinite dialogue to Stardew Valley. Covering all villagers, the mod uses AI to create fully context-aware dialogue wherever repetition is a problem, leaving classic lines in place for events and festivals.

Translations

- Turkish

- Spanish

- Russian

- Mandarin

- French

Overview

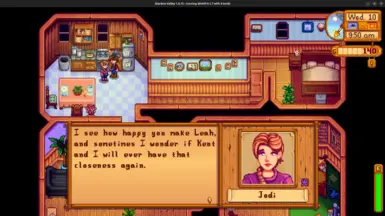

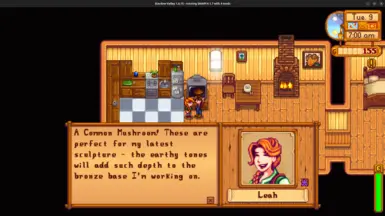

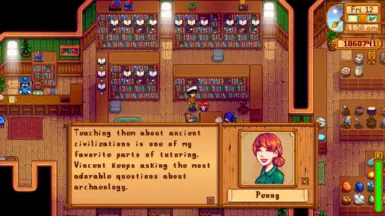

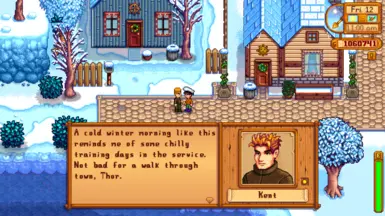

ValleyTalk uses the power of modern AI to make Stardew Valley conversations immersive in a way that was not possible previously. Talk to your spouse about options for home schooling your children, discuss your latest finds in the mines, have the villagers remember you are married when you give them a gift, let the villagers refer to each other when talking in a group - the options are endless. See the demo video for just a few of the conversations that happened while playing three in-game days (it still needs updating to show text input for v1.0+).

v1.0.0

This is a major change that responds to a lot of regularly requested features. The format of the mod dialogue and biographies has changed, so any existing biographies you have written will need to be updated. The config file format has also changed. Major changes in this version include:

- A complete rewrite of how the mod deals with the game summary and biographies, to create and load these using Content Patcher. This enables other mods to modify the information that ValleyTalk sees - so they can insert additional biographies, locations, relationships, etc or mod the prompts to change how LLMs respond to the user.

- ValleyTalk content patches can be stand-alone - like the Valley Talk for SVE content pack - or included in mods that introduce new characters, locations, etc. You are welcome to produce and distribute any content packs subject only to other people's copyright.

- You can now enter custom comments in discussions, either by initiating a conversation with a custom question (hold down the left Alt key on your keyboard while clicking on a character) or by selecting 'Something Else' from the response options. You can configure whether the 'Something Else' option is always shown, shown only when there are other response options, or never shown.

- Added direct support for the Chinese-based LLM providers DeepSeek and VolcEngine. For me DeepSeek seems very slow, but performance may be better from elsewhere. I have not been able to test the VolcEngine adapter as I struggled to register with them without Chinese ID, so please let me know if this works.

- Fixed the bug saving conversation history with Mister Qi or any other characters with spaces in their name. The code should now allow history to be saved for villagers with any characters in their name.

- The mod no longer hangs when generation is happening - showing a 'Thinking...' window instead.

- Many other small fixes and enhancements.

- Sorry - no Android support still. I tried, but the mod still hangs on load for me.

- Support for multiplayer games. Note: players other than the host will save their event history in a folder inside the ValleyTalk mod folder - which will need to be retained on upgrades.

- Spouses will give and talk about gifts, based on the gifts given in the original game.

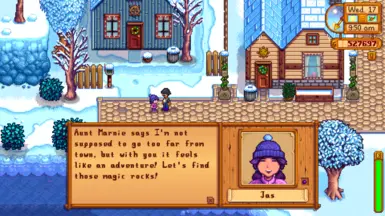

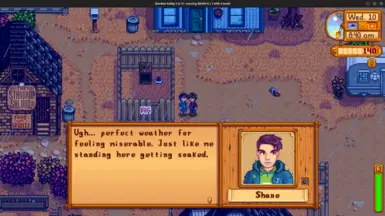

- Prompt changes for Shane and Jas early in the game, to make their responses more canon consistent.

- Improved recognition of when a child will come through pregnancy and when through adoption.

- Bug fixes: Llama.cpp support fixed, fault tolerance on invalid DisplayName fields, generation failed error message improvements, case sensitivity in schedules.

v0.13.0

- Full support for the Polyamory Sweet mod and Immersive Spouses. All villagers will acknowledge and accept all of your spouses.

- Fixes to error logging when running in a language other than English.

- Cleanups to occasional missing punctuation.

- Additional knowledge for AI models - when you were married, multiple spouses, day schedules.

Features

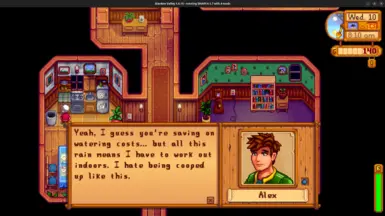

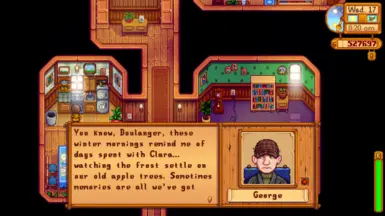

- When talking to any villager they will react based on friendship level and season as normal, as well as other factors including weather, time of day, where they are standing, who else is present, community events, bundle completion, how they feel about any gift they've been given and their history with the player.

- When talking to a spouse they will also be aware of buildings, animals and crops currently on the farm, pets, children, happiness in the marriage, how long they've been married, any other spouses (if using a polyamory mod) and more.

- NEW in v1.0.0 - You can respond to questions from villagers by typing your own response, or selecting from 3 or 4 options proposed by the LLM. NOTE: Most commercial LLM providers apply a level of censorship to their inputs and outputs. If you give responses that are NSFW or otherwise controversial on some providers the models may refuse to respond and your LLM account may be suspended and credit lost. You have been warned.

- Villagers and spouses remember past conversations and refer back to them in the future.

- All villagers respond in character.

- The farmer is able to have long back and forth conversations with the villagers, exploring aspects of their character and relationship.

- Can be run in conjunction with other dialogue mods and custom NPCs. New in v1.0.0 - NPC biographies can be added using Content Patcher content packs, so users can extend coverage to any of their own villagers and locations.

- Works with all major large-language model providers, including free options.

- Combines contextually aware conversation with standard (or modded) dialogue for events and festivals. NOTE: Many villagers have "event" dialogue for when they first speak to the farmer, so the first conversation with these villagers will use the standard lines.

- Support for languages other than English either using translation packs via i18n or translating on the fly using a technique proposed by kurotori1788c (thanks).

Limitations

This mod remains a work in progress, and there are still a bunch of additional features I plan to add including:

- Festival dialogue.

- Understanding of the rooms within buildings.

- Full biographies for SVE villagers - Fixed in v1.0.0 - see separate ValleyTalk for SVE mod content pack.

There are also some technical limitations at present:

- Responses when talking to villagers are necessarily slower than for the canon dialogue. Typically a response takes a couple of seconds to generate, but speed depends on which provider is being used. From v1.0.0 a 'Thinking...' window is shown during generation instead of the game hanging.

- When playing with a controller there is a problem selecting dialogue as conversations get longer. This appears to be a bug in the base game, and has been reported. The desired response can always be selected with a mouse.

- The game will freeze while responses are generated - Fixed in v1.0.0.

Prerequisites

- Stardew Valley 1.6.14 (note that as this is a code patch, later versions may break)

- SMAPI 4.1.7

- (Optional) Generic Mod Config Menu 1.14.1 or later.

- Content Patcher 2.6.1 or later.

- The mod has been tested on Windows, Mac and Linux. It is known not to be compatible with Android. Compatibility with other platforms is unknown.

Relationship with Other Mods

ValleyTalk is the result of months of work tuning prompts for modern AI models to give good Stardew dialogue in the authentic voice of the characters and hooking this into the Stardew code in a way that allows it to reflect the full game situation. It was not built using content from any other mods, and when running does not use any content from other mods to generate outputs even if they are installed together.

ValleyTalk can be installed in conjunction with most other mods, but it operates independently and the dialogue generated won't reflect changes to characters or game scenarios from those mods.

Known Compatible Mods

- Stardew Valley Extended - full support with the ValleyTalk for SVE Content Pack.

- Polyamory Sweet - all villagers are aware of and talk about all of your spouses.

- Immersive Spouses - spouses and other villagers know their plans for the rest of the day, and can talk about them.

- Generic Mod Config Menu - gives a configuration UI for ValleyTalk.

- Most other operational mods that don't patch the dialogue code - Automate, SpaceCore, Farm Type Manager, Expanded Preconditions, etc are the ones I have tested.

ValleyTalk is known not to work with the following mods:

- Cooperative Egg Hunt

- Button's Extra Trigger Action Stuff

Installation

- Download the zip file. Ensure you have the prerequisites installed.

- Extract the contents of the zip file into a folder named “ValleyTalk” in your mods folder (from v1.0.0 there will be two subfolders: ValleyTalk, which contains the DLL and config file, and a base content pack).

- Select which LLM provider to use.

- If using a commercial provider, set up an account and get an API key.

- Update the config.json file with the necessary details (see below).

- Play as normal

You can add the mod with an existing Stardew save game, though the characters won’t remember any conversations that happened before the mod was installed.

To delete the mod simply delete the ValleyTalk folder.

To update the mod it is safest to delete the existing folder and recreate it. Before deleting the folder you will want to take a copy of the config.json file, and put it back into the new folder.

Configuring

You can configure the mod either directly in the config.json file or using the Generic Mod Configuration Menu mod.

AI Model Configuration

Generally it is best to set up the configuration directly in config.json if you can, using the config details below for your chosen provider.

The mod supports the GMCM, but some of the dynamic elements stretch what GMCM can support. Please note the following:

- The models supported by a particular provider are only updated when the ValleyTalk screen in GMCM is opened. Therefore, after selecting a provider and entering your API key for it you will need to hit “Save & Close” on the config screen and reopen it to get an updated list of model names.

- The model providers dropdown list has more than 5 items but only 5 are initially displayed. You can scroll to the rest of the list using the scroll wheel on a mouse or the second stick on a game controller.

- Only the inputs that are needed for the selected provider will be displayed. These also are only updated when the screen is opened.

A walk through of how to configure the mod for API access using Claude 3.5 Haiku via OpenRouter is here:

An additional video showing how to configure the free Mixtral 8x22b model is included in the mod videos.

How Often to Show

ValleyTalk has three configuration options that allow users to configure how often generated dialogue is shown. For each of these the frequency is specified on a scale from 0 (never show) to 4 (always show). The three fields are:

- MarriageFrequency - how often to use ValleyTalk when talking to a spouse.

- GiftFrequency - how often to use ValleyTalk when a gift is given.

- GeneralFrequency - how often to use ValleyTalk for any other dialogue.

Users can also specify a list of characters for which the mod should not be applied, as a list of character names divided by commas in the "DisableCharacters" field.
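
The settings above can be sanity-checked with a short script. This is a minimal sketch, assuming the three frequency fields are stored as integers and DisableCharacters as a single comma-separated string; the helper name `check_frequency_settings` and the sample values are hypothetical and not part of the mod.

```python
FREQUENCY_FIELDS = ("MarriageFrequency", "GiftFrequency", "GeneralFrequency")


def check_frequency_settings(config):
    """Return (frequencies, disabled_names) or raise ValueError.

    Assumes integer frequencies from 0 (never show) to 4 (always show) and a
    comma-separated DisableCharacters string, as described above.
    """
    frequencies = {}
    for field in FREQUENCY_FIELDS:
        value = config[field]
        if not isinstance(value, int) or not 0 <= value <= 4:
            raise ValueError(f"{field} must be an integer from 0 to 4, got {value!r}")
        frequencies[field] = value
    # Split the comma-separated list and drop stray whitespace and empty entries.
    raw_names = config.get("DisableCharacters", "")
    disabled = [name.strip() for name in raw_names.split(",") if name.strip()]
    return frequencies, disabled


example = {
    "MarriageFrequency": 4,  # always use ValleyTalk when talking to a spouse
    "GiftFrequency": 2,
    "GeneralFrequency": 3,
    "DisableCharacters": "Krobus, Dwarf",  # sample names only
}
frequencies, disabled = check_frequency_settings(example)
print(frequencies, disabled)
```

Checking the values before launching the game catches out-of-range numbers early; how the mod itself reacts to invalid values is not covered here.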

Manual Response Input

You can configure whether the game offers the option of a manually typed response: Always (including when no computer generated options are provided), only when generated options are available, or Never.

It is also possible to configure the key which is used to trigger the ability to enter your own comments to start a conversation - which defaults to the Left Alt key.

Running Costs

The mod is free, and if you use a locally hosted large language model or a free hosted model then running it can be free too. The mod is structured to make effective use of prompt caching when running locally using LlamaCpp or similar, but local hosting will still typically be slow.

If you are using one of the commercial LLM providers, then you just pay for what you use. Pricing is usually quoted as a cost per million “tokens” – broken down into inputs, cached inputs and outputs. In testing I found I got between 100 and 150 conversations per million tokens of input – with about half of that cached. The output tokens were minimal by comparison. Using Claude 3.5 Sonnet at $2.00 per million tokens of non-cached input that works out to about 1 US cent per conversation.
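
The arithmetic behind that estimate can be sketched as follows. This is only a rough illustration using the figures quoted above; it assumes cached input and output tokens cost nothing, which understates the real bill slightly, and the function name is hypothetical. Check your provider's current price list before relying on the result.

```python
def cost_per_conversation(conversations_per_million,
                          price_per_million_usd=2.00,
                          cached_fraction=0.5):
    """Estimate the non-cached input cost of one conversation in US dollars."""
    tokens_per_conversation = 1_000_000 / conversations_per_million
    non_cached_tokens = tokens_per_conversation * (1 - cached_fraction)
    return non_cached_tokens / 1_000_000 * price_per_million_usd


low = cost_per_conversation(150)   # more conversations per million tokens
high = cost_per_conversation(100)  # fewer conversations per million tokens
print(f"{low * 100:.2f} to {high * 100:.2f} US cents per conversation")
# prints "0.67 to 1.00 US cents per conversation"
```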

Model Providers

TL;DR (as at 31 May 2025):

- If you want to try the mod for free, I would recommend Google's Gemini 2.5 Flash model - which seems to be fast and smart enough to give a good experience.

- The best overall experience still seems to come from using Anthropic's Claude models - particularly 3.5 Sonnet and 3.7 Sonnet. My experience with Claude 4.0 hasn't been great for this use case so far.

- OpenAI's gpt-4o, Mistral's Mistral Large and Anthropic's Claude 3.5 Haiku also work well.

Note that I am not affiliated with any LLM provider, and my recommendations are based purely on performance and cost. These also change a lot, so sorry if my suggestions get stale.

The mod works with most major providers of large language models and also has support for self-hosted models via LlamaCpp and OpenAI compatibility. For any of the commercial large language model providers (OpenAI, Anthropic, Google, DeepSeek, VolcEngine and Mistral are directly supported) you will need to supply an API key in the configuration file for access.

Mistral / Mistral Large

Mistral Large is the strongest of the open weights models currently available to host yourself, though you will likely get better performance from Mistral's hosted service, La Plateforme.

Mistral Large has good world knowledge and is noticeably more liberal than the other hosted models in what topics it is willing to discuss.

Getting an API key

You can create an account at: https://auth.mistral.ai/ui/registration

You will need to add billing details to use Mistral Large.

Config Details

- Provider: Mistral

- ModelName: see below

- ApiKey: Required

- Debug: Optional

- EnableMod: true

- ServerAddress and PromptFormat are ignored.
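
As an illustration, the fields above could be assembled into a config.json fragment like this. It is a sketch only: the field names come from the list above, but the value types (booleans for Debug and EnableMod) and the placeholder key are assumptions, and the config.json shipped with the mod may contain other fields that should be left in place.

```python
import json

# Field names are taken from the Config Details list; the values are examples.
mistral_config = {
    "EnableMod": True,
    "Provider": "Mistral",
    "ModelName": "mistral-large-latest",
    "ApiKey": "YOUR-MISTRAL-API-KEY",  # placeholder, replace with your own key
    "Debug": False,                    # optional
}

config_text = json.dumps(mistral_config, indent=2)
print(config_text)
```

ServerAddress and PromptFormat are left out because this provider ignores them.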

Best Models

- mistral-large-latest (alternatively known as mistral-large-2411)

- open-mixtral-8x22b-2404 - Available on a free plan. Works well though characterisation of the villagers lacks a little.

Anthropic / Claude

Claude 3.5 & 3.7 Sonnet give the best overall dialogue quality, at the cost of being slower and more expensive than many other models and somewhat prudish in what they are willing to discuss. Claude Sonnet seems to have a lot of built-in knowledge about Stardew, so it is able to respond with more game details than most other models.

Claude 3.5 Haiku is still one of the best models, and is significantly cheaper and faster than Sonnet. It is still somewhat prudish and has less intrinsic game knowledge than Claude Sonnet.

The focus of Claude 4.0 seems to have made it less useful for this mod.

Via OpenRouter.ai

The easiest way I've found to access the Anthropic models is via openrouter.ai - which also offers most of the other models listed here.

You can sign up at: https://openrouter.ai/

Config Details

- Provider: OpenAiCompatible

- ModelName: see below

- ApiKey: Required

- Debug: Optional

- EnableMod: true

- ServerAddress: https://openrouter.ai/api

- PromptFormat is ignored.

Getting an API key Directly

As at December 2024, Anthropic were seeking to restrict their direct APIs to business use, so you need to give business details when signing up. To use Anthropic's models directly, sign-up at: https://www.anthropic.com/api

Open AI / GPT-4o

OpenAI’s models work with ValleyTalk and come very close to matching the performance of Claude Sonnet. Best results seem to come from using the GPT-4 series models rather than the newer o1 & o3 models.

Getting an API key

You can create an account at https://openai.com/index/openai-api/ .

Config Details

- Provider: OpenAI

- ModelName: see below

- ApiKey: Required

- Debug: Optional

- EnableMod: true

- ServerAddress and PromptFormat are ignored.

Best models

- gpt-4o-2024-11-20

- gpt-4o-mini-2024-07-18 - cheaper to run. Probably also faster, though full gpt-4o is already very fast.

OpenAI have a lot of non-text models such as DALL-E and Whisper. Those cannot be used with ValleyTalk.

Google / Gemini

Google's Gemini 2.5 Flash is currently the strongest model for ValleyTalk that can be used for free, though it is currently in preview and so subject to change. All you need to use it is a Google API key.

The outputs from Gemini 2.5 Flash are good, though I have witnessed occasional confusion about the Stardew world.

Getting an API key

You can create an API key at: https://aistudio.google.com/apikey - using a new or existing Google account.

You can access the free tier of Gemini Flash without adding funds / billing details.

Config Details

- Provider: Google

- ModelName: see below

- ApiKey: Required

- Debug: Optional

- EnableMod: true

- ServerAddress and PromptFormat are ignored.

Best models

- gemini-2.5-flash-preview-05-20

OpenAI Compatible

Best models

ValleyTalk requires a high-quality text generation model to operate. Don't expect to get good results with a 7b or 32b parameter model. Beyond that many models can be used. I cannot guarantee that outputs will remain SFW if run with a model that has been tuned to give NSFW responses.

Config Details

- Provider: OpenAiCompatible

- ModelName: Depends on server

- ApiKey: Depends on server

- Debug: Optional

- EnableMod: true

- ServerAddress: Required (for example “http://localhost:8080/api/v1/”)

- PromptFormat is ignored.

LlamaCpp

This is the only adapter that requires a “Prompt Format” input containing details of the prompt to pass to the target model. In this format you can use \n to represent a new line, and you should include “{system}”, “{prompt}” and “{response_start}” in the format somewhere as these will be substituted with details generated by the mod.
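
As an illustration of how such a format might be filled in, the sketch below substitutes the three placeholders into a template. The ChatML-style tags in the template, the function name `fill_prompt_format` and the sample strings are assumptions for illustration; use whatever template your model expects, and note that the mod's own substitution logic may differ.

```python
# A hypothetical PromptFormat value, with new lines written as a literal
# backslash followed by "n" as described above.
prompt_format = (
    "<|im_start|>system\\n{system}<|im_end|>\\n"
    "<|im_start|>user\\n{prompt}<|im_end|>\\n"
    "<|im_start|>assistant\\n{response_start}"
)


def fill_prompt_format(template, system, prompt, response_start):
    """Expand the literal backslash-n markers and the three placeholders."""
    text = template.replace("\\n", "\n")
    # Plain str.replace is used instead of str.format so that any other
    # braces in the template are left untouched.
    return (
        text.replace("{system}", system)
        .replace("{prompt}", prompt)
        .replace("{response_start}", response_start)
    )


filled = fill_prompt_format(
    prompt_format,
    system="You are Abigail from Stardew Valley.",          # sample text
    prompt="The farmer says hello outside the general store.",
    response_start="- ",                                    # sample text
)
print(filled)
```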

Config Details

- Provider: LlamaCpp

- Debug: Optional

- EnableMod: true

- ServerAddress: Required (for example “http://localhost:8080/completion”)

- PromptFormat: Required

- ModelName and ApiKey are ignored.

Disclaimer

The mod seems to be working reliably for me now, but it supports such a wide range of scenarios that it is hard to test thoroughly. Bugs will probably still be present; please raise them in the comments.

The mod relies on AI returning responses in a specific format to interpret them. On occasion this doesn’t happen, usually because the model being used is not clever enough or the model has been trained to refuse to engage in the topics being discussed. If this happens the mod will try four times to get a valid response and then give up. This may result in longer than usual delays and higher than usual costs.
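
The retry behaviour described above can be pictured with a small sketch. It is not the mod's actual code: the function names, the canned replies and the format check are all hypothetical stand-ins, and only the limit of four attempts comes from the description.

```python
MAX_ATTEMPTS = 4  # the mod tries four times before giving up


def generate_with_retries(request_response, is_valid, max_attempts=MAX_ATTEMPTS):
    """Call request_response() until is_valid(reply) is true or attempts run out.

    Returns (reply, attempts_used); reply is None if every attempt failed.
    """
    for attempt in range(1, max_attempts + 1):
        reply = request_response()
        if is_valid(reply):
            return reply, attempt
    return None, max_attempts


# Stand-in for a model that answers badly twice, then well.
canned_replies = iter(["I cannot help with that.", "", "- Nice weather today, farmer!"])
reply, attempts = generate_with_retries(
    request_response=lambda: next(canned_replies),
    is_valid=lambda text: text.startswith("- "),  # hypothetical format check
)
print(reply, attempts)
```

Each failed attempt is still a full request to the provider, which is why retries add both delay and cost.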

The information provided to the models is generally family friendly, though does indicate physical relationships between happily married and seriously dating couples. Despite this, responses using uncensored and NSFW language models may be unpredictable.