About this mod

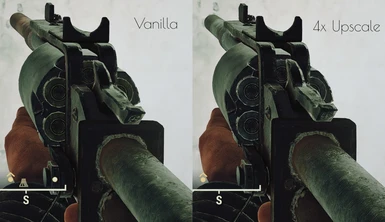

2x/4x AI Upscales of the vanilla textures for people with PER 15, INT 1, and unlimited VRAM


Installation:

Download the mod via Vortex (or manually extract .7z archives to Fallout76\Data). Cloudy01's Fallout 76 Mod Manager is not well suited for managing this mod unless you are okay waiting a few hours for it to decompress, merge, and recompress this mod.

I recommend managing this mod manually by creating a Fallout76Custom.ini that contains the following:

[Archive]

sResourceArchive2List=4xActorsMonsters.ba2, 4xPowerArmor.ba2, 4xWeapons.ba2, 4xArmorClothing.ba2

If you use Cloudy's for managing lots of other mod installs, it's best to enable what you need in Cloudy's, save, and then manually add the HD texture *.ba2's to Fallout76Custom.ini afterwards.
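If you'd rather script that last step, here is a minimal Python sketch (not part of the mod; `add_archives` is a hypothetical helper) that appends the HD .ba2 names to `sResourceArchive2List` under `[Archive]` without duplicating entries. It assumes the ini uses plain `key=value` lines with comma-separated archive lists, as in the example above. Back up Fallout76Custom.ini before writing the result over it.

```python
def _merge(current: list[str], new: list[str]) -> list[str]:
    """Append entries from `new` that aren't already present (case-insensitive)."""
    merged = list(current)
    seen = {name.lower() for name in merged}
    for name in new:
        if name.lower() not in seen:
            merged.append(name)
            seen.add(name.lower())
    return merged


def add_archives(ini_text: str, archives: list[str], key: str = "sResourceArchive2List") -> str:
    """Return ini_text with `archives` appended to `key` in the [Archive] section.

    Creates the section and/or key if missing. Assumes simple `key=value` lines;
    other sections and lines are passed through untouched.
    """
    lines = ini_text.splitlines()
    header = next((i for i, line in enumerate(lines) if line.strip().lower() == "[archive]"), None)
    if header is None:
        # No [Archive] section yet: add one at the end of the file.
        lines += ["[Archive]", f"{key}=" + ", ".join(_merge([], archives))]
        return "\n".join(lines) + "\n"
    # The section runs until the next [Header] line or the end of the file.
    end = next((j for j in range(header + 1, len(lines)) if lines[j].strip().startswith("[")), len(lines))
    for j in range(header + 1, end):
        name, sep, value = lines[j].partition("=")
        if sep and name.strip().lower() == key.lower():
            current = [v.strip() for v in value.split(",") if v.strip()]
            lines[j] = f"{name.strip()}=" + ", ".join(_merge(current, archives))
            return "\n".join(lines) + "\n"
    # Section exists but the key doesn't: add it directly under the header.
    lines.insert(header + 1, f"{key}=" + ", ".join(_merge([], archives)))
    return "\n".join(lines) + "\n"
```

Usage: with `p` pointing at your Fallout76Custom.ini, `p.write_text(add_archives(p.read_text(), ["4xWeapons.ba2", "4xPowerArmor.ba2"]))`. Existing entries written by Cloudy's stay first; the HD archives are appended after them.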

2x Textures Performance Impact:

- FPS: Negligible impact with 8 GB+ of VRAM, a fast CPU, and a fast SSD (preferably M.2)

- Load Times: 4x longer texture loading times/'pop-in' compared to vanilla

- Gameplay Effects: Small, usually unnoticeable increase in weapon load times in menus; combat doesn't appear to be affected as badly as with 4x textures (see below)

4x Textures Performance Impact:

- FPS: Low impact with 12 GB+ of VRAM, a fast CPU, and a fast SSD. High impact if you use too many 4x textures and exceed your dedicated VRAM

- Load Times: 16x longer texture loading times/'pop-in' compared to vanilla for models that use 4x textures, and these can hold up the load queue for other models/textures

- Gameplay Side Effects: If a weapon texture isn't fully cached/loaded before you try to use it, you will be unable to shoot, use stimpaks, do actions, etc. in-game until the texture has loaded, but you CAN get killed while in this state. Heavy weapons in particular are heavily affected; smaller weapons, which typically have half the texture resolution, are not as significantly affected.
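The 4x and 16x load-time multipliers follow from pixel counts: doubling each side of a texture quadruples its pixels, and 4x per side means 16x. The sketch below estimates what that does to texture memory. It assumes standard BCn block sizes (8 bytes per 4x4 block for BC1/BC4, 16 bytes for BC3/BC5/BC7) and ignores .ba2 packing and the game's texture streaming, so treat the results as rough per-texture estimates; `dds_bytes` is a hypothetical helper.

```python
# Bytes per 4x4 block for common block-compressed (BCn) formats.
BLOCK_BYTES = {"BC1": 8, "BC4": 8, "BC3": 16, "BC5": 16, "BC7": 16}


def dds_bytes(width: int, height: int, fmt: str, mips: bool = True) -> int:
    """Approximate size in bytes of a BCn texture, optionally with a full mip chain."""
    total = 0
    w, h = width, height
    while True:
        # Every mip level is stored as whole 4x4 blocks, rounding partial blocks up.
        total += ((w + 3) // 4) * ((h + 3) // 4) * BLOCK_BYTES[fmt]
        if not mips or (w == 1 and h == 1):
            return total
        w, h = max(1, w // 2), max(1, h // 2)
```

For example, a 1024x1024 BC7 texture is 1 MiB at its top mip level; its 4x counterpart (4096x4096) is 16 MiB, or roughly 21 MiB with a full mip chain, since mips add about a third.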

Current Progress:

- All 2x/4x packs uploaded (except landscapes/scenery; I still need to debug alpha channel problems)

KNOWN ISSUES/BUGS:

- Wolves may be invisible (visible only via their shadow) but are still killable

- Eyelashes are white/bugged

- Some hair textures are very noisy/shimmery

TEXTURES NOT UPSCALED YET:

- Blue Ridge Powerarmor/Weapons Skins

- BOS Powerarmor/Weapons

- Hellcat Merc Powerarmor

- Crazed Supermutants

- Supermutant Behemoth

- Mercenary Outfit

- Blood Eagle Power Armor/Weapons Skins

- M.I.N.D. Powerarmors/Weapons

- Some new backpack skins (e.g. First Responder)

- Vault Salesman Outfit

- Raider Powerfist

- Peppershaker Shotgun

- Stinger Broadsider

Log:

9/18/2021

- Hard to isolate (it seems to happen less when this mod is disabled), but it seems like the new patch doesn't like this mod for some reason and quite frequently insta-crashes to desktop. I'm trying to isolate why and which pack(s) are causing it. If it's a format mismatch problem, my new release will fix it, but... it may be that Bethesda is doing something with resource management and the extreme VRAM this mod uses breaks whatever they're doing. Update: after testing mod-less FO76 on multiple machines, the random crashes to desktop appear to be completely unrelated to this mod and more likely just Bethesda's new patch being buggy.

- Nexusmods admins recommended splitting the 4x .ba2's into multiple parts rather than uploading large single files, so I need to see how the multiple-archive option works in Archive2, especially since packs like power armor have lots of identical copies of textures that normally get compressed down to nothing

- Almost done rescaling the textures from the new patches

- Had to rewrite some of my nvddsinfo scripting, as I was missing textures due to parsing issues I couldn't fix in my Windows .bat files. Rewrote it to be multithreaded, since single-threaded runs over 65k textures took hours

- Nexusmods has quarantined my 4x releases because they are split archives, and there isn't a way to release single-BA2 versions of the 4x packs, since Nexus currently has a 20 GB file size limit and the current 4x .ba2's are 40 GB or larger. Going to contact Nexusmods support to see what's possible and what the correct course of action is. The only way around it right now is for me to release individual .ba2's for the 4x variants, manually balancing the number of textures in each so that they all stay under 20 GB. I've added the Google Drive mirror to the 2x file descriptions so people can manually download the 4x variants if they want them. This is a lot of work and I'd have to write a script to do the balancing. First gripe I've had with Nexusmods :(

- Finishing up a better format segmentation script so that my upscaled DDS exports match the formats of Bethesda's original DDS files. Aiming to re-release the 2x/4x packs tomorrow with the textures missed in the last release, some bug fixes for shimmering/noisy hair, and the new textures from the Sept 8th 2021 Fallout Worlds patch.
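The balancing script mentioned in this entry is essentially bin packing. A common heuristic is first-fit decreasing: sort items largest first and drop each into the first archive that still has room, opening a new archive when none does. The sketch below assumes you already have a size per item and a byte limit; `balance` is a hypothetical helper, and since the final .ba2 size after Archive2 compression will differ from the summed input sizes, leaving headroom under the 20 GB cap is wise. Items could also be groups of identical textures, so that duplicate copies (like the power armor ones) land in the same archive.

```python
def balance(sizes: dict[str, int], limit: int) -> list[list[str]]:
    """First-fit decreasing: place each item, largest first, into the first
    part with enough remaining room, opening a new part when none fits."""
    parts: list[list[str]] = []
    free: list[int] = []  # remaining capacity of each part
    for name, size in sorted(sizes.items(), key=lambda kv: kv[1], reverse=True):
        if size > limit:
            raise ValueError(f"{name} alone exceeds the limit")
        for i, room in enumerate(free):
            if size <= room:
                parts[i].append(name)
                free[i] -= size
                break
        else:
            # No existing part had room: start a new one.
            parts.append([name])
            free.append(limit - size)
    return parts
```

First-fit decreasing isn't guaranteed to use the minimum number of archives, but it is simple and usually close, which is enough for keeping every part under an upload limit.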

8/24/2021

- More in-game testing shows that some characters' hair is too shimmery/noisy for some reason. Going to try adjusting the DXT format, but I may need to exclude hair from upscaling to prevent rendering problems

- Characters' eyelashes are all white now, which also isn't right (unless it's a side effect of rads).

8/23/2021

- Testing in game, I found that the mutated wolf textures render blank and you can only see the wolves' shadows. Looking at the DXT info, this texture originally used BC3 and doesn't like being exported as BC7. Need to finish up the DXT format comparisons and issue a patch for the existing packs. Surprisingly this is the only real game-breaking bug I've run into so far, and even then it adds a bit of a fun element: they're effectively phantom wolves that you can only locate/shoot by listening to their paw sounds and triangulating where their body is from the shadows they cast, haha. Or use VATS.
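Checking which block-compression format a texture uses, as in the BC3-vs-BC7 wolf case, only needs the DDS header. Below is a minimal pure-Python reader based on the documented DDS layout: a 4-byte magic plus 124-byte header, the FourCC at byte 84, and, when the FourCC is `DX10`, an extension header whose first field is the DXGI format. It names only a handful of formats and falls back to the raw code otherwise. It is an alternative way to pull the same fields, not a wrapper around nvddsinfo, and `dds_info` is a hypothetical name.

```python
import struct

# A few DXGI_FORMAT values used by the DX10 header extension.
DXGI_NAMES = {71: "BC1_UNORM", 72: "BC1_UNORM_SRGB", 77: "BC3_UNORM", 78: "BC3_UNORM_SRGB",
              80: "BC4_UNORM", 83: "BC5_UNORM", 98: "BC7_UNORM", 99: "BC7_UNORM_SRGB"}
# Legacy FourCC codes used by pre-DX10 headers.
FOURCC_NAMES = {b"DXT1": "BC1", b"DXT3": "BC2", b"DXT5": "BC3", b"ATI1": "BC4",
                b"BC4U": "BC4", b"ATI2": "BC5", b"BC5U": "BC5"}


def dds_info(data: bytes) -> dict:
    """Read width, height, mip count and compression format from DDS file bytes."""
    if data[:4] != b"DDS ":
        raise ValueError("not a DDS file")
    height, width = struct.unpack_from("<II", data, 12)
    mips = struct.unpack_from("<I", data, 28)[0]  # 0 can also mean "no mip count stored"
    fourcc = data[84:88]
    if fourcc == b"DX10":
        # DDS_HEADER_DXT10 starts right after the 128-byte magic+header.
        dxgi = struct.unpack_from("<I", data, 128)[0]
        fmt = DXGI_NAMES.get(dxgi, f"DXGI{dxgi}")
    elif fourcc == b"\0\0\0\0":
        fmt = "uncompressed"  # e.g. A8R8G8B8, described by bit masks instead
    else:
        fmt = FOURCC_NAMES.get(fourcc, fourcc.decode("ascii", "replace"))
    # "family" drops the _UNORM/_SRGB suffix so DX9 and DX10 headers compare equal.
    return {"width": width, "height": height, "mips": mips, "format": fmt,
            "family": fmt.split("_")[0]}
```

Comparing `family` between a vanilla texture and its upscaled export would flag a mismatch like an original BC3 texture exported as BC7; comparing `format` additionally catches sRGB vs linear differences.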

8/22/2021

- Cleaning up all project files because I'm getting tight on space. The existing files take up about 8TB across 5 drives. I've been shuffling things around on USB 3.2 M.2 drives so that work could be parallel processed on different machines, but I've found lots of failures (corrupt PNGs) and PNGs that weren't fully compressed (for processing speed at the time, I used compression level 1 in many cases). Now I'm going back and recompressing at level 9 and saving as grayscale when appropriate to save some space.

- Writing a script for resolution and format comparisons via nvddsinfo. Need to compare the resolutions in the current patch vs the pre-Wastelanders patch to isolate texture size changes, then modify my existing PSNR comparison scripts to scale the old and new patch images to the same size and run PSNR comparisons, so that I only pick up textures that were only rescaled and not otherwise modified by Bethesda. Also using this DDS comparison stage to look at why some landscape alpha textures break during in-game rendering. Likely some alpha textures use a different DXT format, e.g. 1-bit alpha vs 8-bit alpha

- Acquired a copy of the last pre-wastelanders ba2's! Woohoo! Huge shoutout to the FO76 Datamining Discord and Nukacrypt !!!!

- PNGs are failing to fully upload; Google Drive kinda sucks at syncing. Pausing this until:

- Found a FO76 datamining discord and it sounds like they will help me get a copy of the pre-wastelanders texture ba2's where many textures were 2x the resolution of what they are now. Going to wait for this before releasing another version. Going to have to give a huge shoutout to the FO76 Datamining Discord and Nukacrypt if it all works out *fingers crossed*.
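The old-vs-new patch check planned in this entry (bring both versions of a texture to the same size, then compare with PSNR) could be sketched with NumPy as follows. Assumptions: textures are already decoded to arrays, the old texture is an integer multiple of the new one's size with the same aspect ratio, a simple box filter stands in for whatever downscaling Bethesda actually used, and the 35 dB threshold is an arbitrary placeholder to tune, not a value from this log. Identical inputs give infinite PSNR and count as a pass.

```python
import numpy as np


def box_downscale(img: np.ndarray, factor: int) -> np.ndarray:
    """Average each factor x factor block (dimensions must be divisible by factor)."""
    h, w = img.shape[:2]
    if h % factor or w % factor:
        raise ValueError("image size is not a multiple of the factor")
    blocks = img.reshape(h // factor, factor, w // factor, factor, *img.shape[2:])
    return blocks.mean(axis=(1, 3))


def psnr(a: np.ndarray, b: np.ndarray, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; identical images return infinity."""
    mse = np.mean((a.astype(np.float64) - b.astype(np.float64)) ** 2)
    return float("inf") if mse == 0 else float(10 * np.log10(peak ** 2 / mse))


def only_rescaled(old: np.ndarray, new: np.ndarray, threshold_db: float = 35.0) -> bool:
    """True if `new` looks like `old` downscaled rather than repainted.

    Assumes old is at least as large as new (hypothetical 35 dB default)."""
    factor = old.shape[0] // new.shape[0]
    return psnr(box_downscale(old, factor), new) >= threshold_db
```

Because both versions are lossy BCn data, real pairs will rarely reach infinite PSNR; the threshold is what separates "same art, smaller" from "Bethesda changed it".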

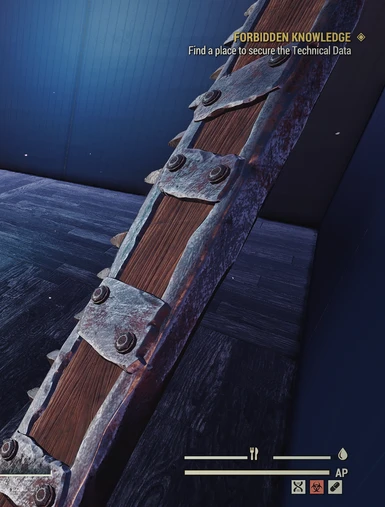

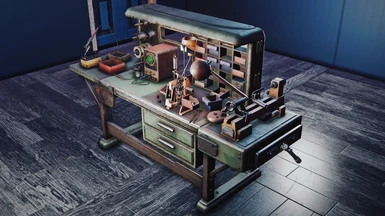

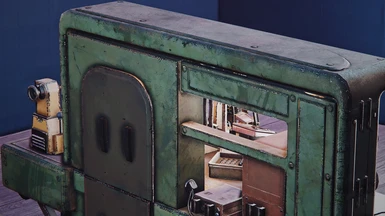

- I've done thorough in-game testing of the 4x textures and have yet to spot a bad texture or rendering problem with actors/clothes/armor/weapons/power armor. The power armor pack is really the most noticeable. No longer do my arms look like a 128x128 texture.

- The existing 4x packs are actually very usable and have good framerates in game without cache thrashing, as long as you have a card with 16 GB of VRAM or more. This will not be the case with the landscape/scenery/prop pack. That pack alone will completely decimate what little VRAM you have left, even in 2x form. Planning on tackling this pack this weekend and potentially seeing if it's possible to cut the _l/_r maps out to reduce the VRAM impact.

- Also thinking about releasing a 1x pack that just sharpens the textures & mipmaps without impacting VRAM

- Also planning on training an ESRGAN model to undo DXT compression, similar to the models that convert JPEG to RAW, so that later I can reprocess all the textures with data closer to the original art assets that only Bethesda's artists have. Right now Gigapixel is working off the compressed DDS's, so it ends up incorrectly trying to extract detail from compression artifacts.

- Adding the raw 4x PNGs to Google Drive as they're fully finalized, in case any modders need the source data, since it sucks to work off a BC1/BC7-compressed base sometimes

- Google Drive is being a pain and not letting me upload the 2x packs but uploading to nexusmods is complete!

- On to debugging landscape alpha issues

8/15/2021:

- Beta upload link on Google Drive for anyone feeling adventurous while I sort out Nexusmods uploads (NOTE THAT GOOGLE MAY SUSPEND DOWNLOADS BECAUSE OF "QUOTA" REASONS... ugh): https://drive.google.com/drive/folders/1jnVzE-OgD2qj7xXVs7KtXWkFsnNVeeFg?usp=sharing

- Currently available or uploading: 4x Weapons, 4x Actors/Monsters, 4x Armor/Outfits/Clothing, 4x Power Armor. I have to debug some issues with the landscape/scene pack, specifically problems with the alpha diffuse textures for trees, grass, and such.

- Having lots of problems uploading to nexus, keeps crashing all my browsers for some reason, don't understand why.

- Mega.nz uploads are MEGA slow for some weird reason and their cost per TB of transfer is really bad, so I've abandoned them.

- Nexusmods has a 20 GB per-file limit, so I'm having to pack the 4x packs into multi-part 7z files, which will require users to rename the files in order to extract them due to file extension restrictions on Nexusmods. This mod is really pushing everything past all reasonable limits for any kind of mod lol.

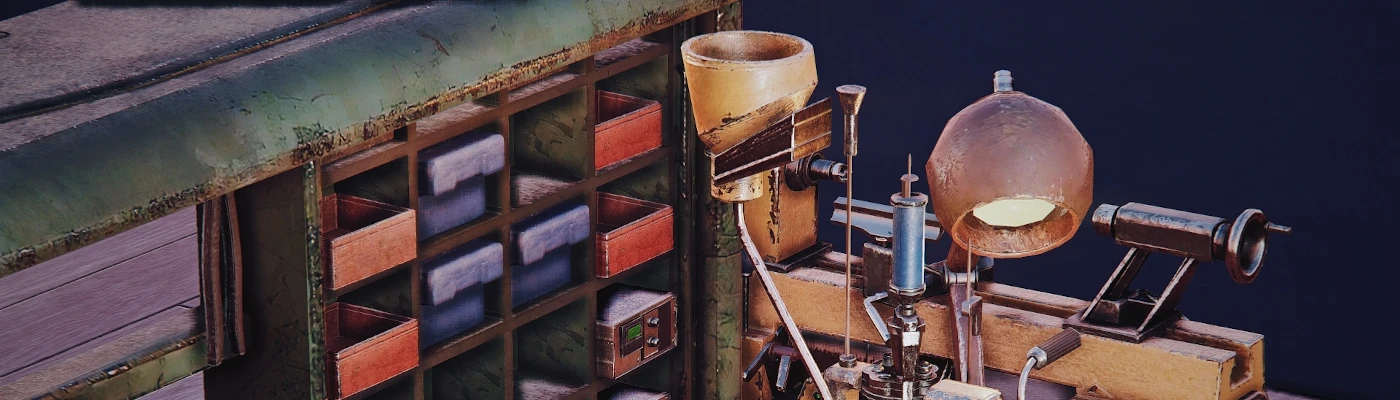

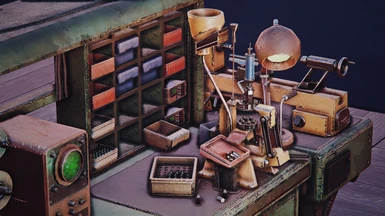

- Added a couple comparison pictures of excavator power armor in my new shelter photoshoot booth that has consistent lighting, FINALLY NO MORE STORMS AND WEATHER AND PESKY TIME AFFECTING MY SCREENSHOTS

- Retiring the processing log:

Processing Log:

dds2png 53074 / 53074 Textures Done (7/5/2021)

Diffuse4x 16421 / 16421 Textures Done (7/12/2021)

Normals4x 12300 / 12300 Textures Done (7/16/2021)

Refl4x/Spec4x 11063 / 11063 Textures Done (7/17/2021)

L_red4x 13290 / 13290 Textures Done (7/21/2021)

L_green4x 13290 / 13290 Textures Done (7/26/2021)

L_blue4x 13290 / 13290 Textures Done (7/30/2021)

L_alpha4x 2779 / 2779 Textures Done (7/26/2021)

PSNR Reprocess 9219 / 9129 Textures Done (8/1/2021)

PSNR Reprocess 2 1596 / 1596 Textures Done (8/1/2021)

PSNR Reprocess 3 455 / 455 Textures Done (8/1/2021)

Diffuse4xRGB 4941 / 4941 Textures Done (8/7/2021)

Diffuse4xAlpha 4941 / 4941 Textures Done (8/8/2021)

Normals4x Reprocess 12300 / 12300 Textures Done (8/8/2021)

-----------------------------------------------------------------------

Fully Completed DDS's

Diffuse2xRGBA 4927 / 4927 Textures Done (8/10/2021)

Diffuse4xRGBA 4927 / 4927 Textures Done (8/10/2021)

Diffuse2xRGB 11449 / 11449 Textures Done (8/12/2021)

Diffuse4xRGB 11499 / 11449 Textures Done (8/12/2021)

Lightmaps2x 13290 / 13290 Textures Done (8/11/2021)

Lightmaps4x 13290 / 13290 Textures Done (8/11/2021)

Normals2x 12300 / 12300 Textures Done (8/10/2021)

Normals4x 12300 / 12300 Textures Done (8/10/2021)

Refl2x/Spec2x 11063 / 11063 Textures Done (8/10/2021)

Refl4x/Spec4x 11063 / 11063 Textures Done (8/10/2021)

----------------------------------------------------------------------

Release/Testing

Manual Clean/Review 53074 / 53074 Textures Done (8/13/2021)

2x/4x Pack Segmentation Scripts Done (8/13/2021)

8/14/2021:

- Help me. I'm archiving x4 ba2 files and I don't think I have enough dedodated wam and need to download more:

- Had to figure out how to bypass BAKA since archiving with it was incredibly slow; finally got Archive2 working on its own and creating correct DDS .ba2's

- 4x looks good for the specific test objects I was looking at, broadening testing now to all scene items/furniture/camp/scenery textures

- I'll probably have to look at re-exporting Diffuse/Spec RGBs as BC7 or BC3 instead of BC1, as there seems to be too much blockiness with BC1

- Need to go do the home expansion/shelter quest to get the private shelter so I can make texture comparisons without local weather/day-night cycle affecting the lighting

- In-game testing of the 2x upscale didn't go well: textures are too blocky/aggressively sharp. Need to test better settings for downscaling 4x to 2x without introducing noise artifacts, or I may need to export with my unmodified Intel DDS PS plugin, not sure yet:

Comparison images: Vanilla, Current 2x (ugh), New 4x (okay-ish, some blockiness, need to debug), Original 4x

8/13/2021:

- Had multiple Windows lockups/copy failures/corruptions via robocopy. Absolutely terrible program for same-drive ops or move ops, but fantastic for directory mgmt & simple copies. Some of my final PNGs were corrupted as a result, and I'll have to regenerate them. Going to do that after release; time crunch is nowwww.

- Finished manually validating everything at a high level

- Completed pack segmentation scripts

- Packs will be split out into Actors/Monsters, Weapons/Ammo, Clothing/Armor, Powerarmor, Environment/Scene/Furniture, Misc/Items, Decals/Blood/Gore, FX, and Interface Items. All will be available in 2x upscale & 4x upscale variants. Though some packs may be cut from the first pass of the new release if they don't work properly in-game.

- Starting 2x ba2 packing now, getting excited for in-game testing woo hoo

8/12/2021:

- Found 13 diffuse textures that export from Photoshop as RGB instead of RGBA despite having alpha channels. Had to work around it via a manual export/reprocess through PSD

- Finally exporting diffuse RGB's dds's and then going to start packing test ba2's for testing tonight :D

- Done with final DDS exports of Lightmaps

- Found a handful of diffuse textures that somehow didn't get scaled or picked up, need to manually clean up and then finish up processing tomorrow, so close to finished woohoo

- The combined size of all 2x dds's will be around 175gb (not sure how much smaller when packed into ba2's)

- The combined size of all 4x dds's will be around 700gb (not sure how much smaller when packed into ba2's), let's hope I don't bring nexusmods down lol. Gdrive links will be available as backup dl sources haha

8/10/2021:

- Done with final DDS exports of Normals, Refl/Spec, and Diffuse RGBA's

- Beginning final work to export Diffuse RGB's & _l maps

8/9/2021:

- Learned the hard way that Photoshop's Automate doesn't notify you if a preset is missing. Almost all of my DDS exports came out in the wrong format, because the default on the Windows accounts I use for parallel processing was BC1: those accounts hadn't been used for this before, so the hidden preset files didn't exist on them. Literally every step of the way something goes wrong on this project, and 99% of my time has been spent fixing or working around problems with incredibly buggy/problematic software >:[

- Have to go and re-export all DDS, sorry guys, more delays ugh

8/6/2021-8/8/2021:

- Running about a day behind. Ran into nonstop issues of course:

- XnConvert has a virtual memory leak (i.e. exploding committed memory but minimal memory actually used), but I really have to use it because nothing else can utilize my Threadripper's 48 threads as efficiently, so I upped my pagefile size to 2TB and am able to process files in ~16k batches without crashing

- Gigabyte makes terrible mobo management software: it was causing sporadic crashes under gdrv2.sys, which sounds like a graphics driver but is actually a useless driver for easy overclocking on my TRX40 mobo, a feature I wasn't even using. Wasted like 4 hours on endless reboots, tweaks, and googling until I found people complaining about it on their forums and how to fix it. Ugh, literally everything is going wrong with this project lol

- Manually reviewing normals, and I really wasn't happy with the results the v5.5.2 algo was spitting out compared to what I got with v4.9. There were so many instances where small details got completely erased; the results weren't artistically true to the original textures and produced many smoothed-out surfaces that looked nice but lacked detail.

Luckily I'm able to get away with GPU processing, so it only took a few hours to upscale and reprocess everything, and luckily the older version of Gigapixel doesn't have as many errors, so normals are all complete, woohoo!

- Had to fix my PS automation for DDS conversion to handle folders instead of open files

- Non-stop issues when doing RGB/A splitting/validation with windows file management. Kept having crashes when overloading the write cache, disabling the write cache reduced crashes but didn't eliminate them. I had to refactor scripts just so it would still fully utilize the CPU while having enough of a delay/spacing between writes so that Winblows wouldn't take a dump.

- So close to being done preprocessing/verifying diffuse, should be able to get it done soon, hopefully EOD 8/9

- _r/_l pre-processing almost done as well, final stretch and only missing my initial target by 9 days so far lmfao

- Bonus: Lovely 2am Upscales I'm definitely not disturbed at all:

8/5/2021:

- Diffuse RGB / Diffuse Alpha Channels split and now being upscaled. The merge process combining the rgb and alpha channels is intensive though unfortunately. Hopefully will be complete by the 6th. But at least this process removes ringing/edge artifacts on these images i.e.:

8/3/2021-8/4/2021:

- Verified that Gigapixel mangles edges of the color values on alpha edges and REQUIRES properly splitting out the alpha channel of any texture before upscaling via gigapixel, upscaling the RGB separate from the A channel, then merging them back together before dumping back into photoshop to export back to DDS.

- I thought I had a working process for managing RGBA diffuse textures vs RGB textures but then found so many weird problems

- I initially exported to PSD and then pulled data from that via imagemagick, but found that it doesn't always handle it correctly when doing 1:1 comparisons vs the original DDS.

- Imagemagick also mangles color data on alpha edges when combining an RGB texture with an alpha texture via compositing and certain copy operations. The only reliable way around this is to split each channel into a separate file, then re-merge them along with the alpha.

- Found a fun bug where GIMP would actually alter the color of the DXT textures by a single RGB value, which is basically unnoticeable when just looking at it but shows up when you actually compare values, so I had to throw GIMP out of my validation workflow.

- Photoshop mangles certain RGBA diffuse textures by adding a weird white border when exporting to PNG.

- The only workaround I found that actually works and doesn't mangle anything: export as an A8R8G8B8 BMP from Photoshop (which ironically fails to import that format properly hahaha), split the BMP into RGB and A via imagemagick, upscale the RGB and the A via Gigapixel, split the upscaled RGB into individual channels via imagemagick, merge each channel individually together with the A to get the final image, save it as a low-compression (level 1) PNG so that colors of transparent pixels aren't removed, import the PNG into Photoshop, and finally export as DDS.......... The amount of trial and error I had to go through to properly upscale the ~4.5k alpha diffuse textures in the game makes my head hurt. It's 2 am again, and I need sleep :(

- Hoping tomorrow night I'm fast enough to truly finalize all the diffuse textures and go back to manual validation. The last 4 steps on the processing queue aren't that bad time wise but still hoping I'll be able to start releasing packs here sunday night, but that assumes I don't encounter more problems and that in-game testing/validation goes well.
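The core of the alpha workaround in this entry is keeping the alpha channel out of the colour upscale and recombining afterwards. Here's a NumPy sketch of just that step; `upscale` and `upscale_alpha` are caller-supplied stand-ins (hypothetical) for Gigapixel, bicubic, Lanczos, etc., and file I/O, the BMP/PSD round-trips, and the DDS export are left out. Because the RGB array is kept whole regardless of alpha, colours under fully transparent pixels survive, and since the merge is a plain array concatenation, no compositing step touches colour values.

```python
from typing import Callable, Optional

import numpy as np

Upscaler = Callable[[np.ndarray], np.ndarray]


def nearest_2x(img: np.ndarray) -> np.ndarray:
    """Placeholder upscaler for the example: nearest-neighbour 2x."""
    return img.repeat(2, axis=0).repeat(2, axis=1)


def upscale_rgba_split(rgba: np.ndarray, upscale: Upscaler,
                       upscale_alpha: Optional[Upscaler] = None) -> np.ndarray:
    """Upscale colour and alpha separately, then stack them back into RGBA.

    The colour pass never sees the alpha channel, and RGB is kept even where
    alpha is 0, so colours under transparent pixels are preserved."""
    upscale_alpha = upscale_alpha or upscale
    rgb = rgba[..., :3]
    # Many upscalers expect 3-channel input, so feed alpha as grey RGB and keep one channel.
    alpha_as_rgb = np.repeat(rgba[..., 3:4], 3, axis=-1)
    rgb_up = upscale(rgb)
    alpha_up = upscale_alpha(alpha_as_rgb)[..., :1]
    if rgb_up.shape[:2] != alpha_up.shape[:2]:
        raise ValueError("colour and alpha upscales differ in size")
    return np.concatenate([rgb_up, alpha_up.astype(rgb_up.dtype)], axis=-1)
```

Passing a different `upscale_alpha` (for example a bicubic resize) lets the alpha take a conventional filter while the colour goes through the AI model.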

8/2/2021:

- While manually reviewing textures I noticed a bunch of diffuse textures where Bethesda has an empty or nearly empty alpha channel, which ends up removing all color data when exporting from Photoshop to PNG. That leaves a solid black texture, which invalidates all PSNR comparisons/manual reviews since the original PNG isn't saved correctly. Ugh. I've managed to find a workaround: export from Photoshop to PSD, then use imagemagick to split into RGB and Alpha. To be 100% sure there aren't other oddities, I'm going to need to re-export all textures from Photoshop to PSD instead of PNG, then use imagemagick to filter the data.

- During manual review I found a number of textures where Gigapixel doesn't handle alpha channel data well and causes excessive edge highlighting that may end up breaking things like leaves, distant tree billboards, monster billboards, vines, etc. An RGB/A split refactor would probably fix this too, by merging in the original alpha channel upscaled via bicubic or Lanczos.

- Found a number of gradient textures that didn't upscale properly, and I don't think they should be upscaled at all. I don't think it would add any fidelity or detail, and it may potentially break things if anything is indexed by pixel (i.e. 0-63px idx) rather than by relative coordinate (i.e. 0.0f-1.0f). Need to remove these from the master

- While 95% of textures are unaffected by these upscaling failures and alpha channel problems, the affected ones are probably game-breaking/immersion-breaking.

- I'm going to have to push back my release estimate to next weekend, it's 2am and I'm tired :\ Sorry everyone, the last 10% polishing phase takes the longest as it always does in any project lol
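The concern about gradient textures in this entry can be shown with a toy 1-D lookup table. If a shader indexed a 64-texel gradient by pixel position, upscaling the texture 4x would silently change what index 63 points to, whereas a normalized coordinate still lands on the same colour. Whether any Fallout 76 shader actually samples gradients by pixel index is not established here; the helpers below are hypothetical and only illustrate the reasoning.

```python
def upscale_row(row: list, k: int) -> list:
    """Nearest-neighbour upscale of a 1-D gradient by factor k."""
    return [v for v in row for _ in range(k)]


def lookup_pixel(row: list, idx: int):
    """Pixel-indexed lookup: idx is an absolute texel position."""
    return row[idx]


def lookup_uv(row: list, u: float):
    """Normalized lookup: u in [0, 1] is mapped onto the row's current width,
    picking the nearest texel the way point sampling would."""
    return row[min(int(u * len(row)), len(row) - 1)]
```

With `row = list(range(64))` and `up = upscale_row(row, 4)`, `lookup_pixel(up, 63)` returns 15 instead of 63, while `lookup_uv(up, 1.0)` still returns 63 and `lookup_uv(up, 0.5)` still returns 32.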

8/1/2021:

- Round 1 of low-PSNR texture reprocessing completed. It's totally bizarre how you can run the same texture through Gigapixel multiple times and get a different result (on their root high-quality models not available publicly). So I'm iteratively reprocessing without changing any settings, and each round still fails at roughly the same rate of 20%.

- Starting up round 2 of PSNR reprocessing, only about 1.6k failed and need reprocessing. May need round 3 or 4 too to knock everything out.

- Starting up round 3 of PSNR reprocessing, some textures need less sharpening as certain patterns seem to cause gigapixel to distort colors too much. Others need one-off custom settings and need to be manually reprocessed.

- Noticed, while finishing all diffuse textures and getting ready for the 2x downscale/DDS export, that some textures weren't picked up by PSNR checking because they had too little non-zero data, so they weren't flagged as bad rescales. There were other PNGs where imagemagick didn't detect that the PNG wasn't fully exported due to a Gigapixel failure or computer crash, so I'm manually screening every 4x PNG to ensure it was upscaled correctly and wasn't partially corrupted by a failed write during a crash

- Obviously going to need to push back release a few days ugh... nothing ever goes smoothly, such is life

- Fixed identical image PSNR validation so I don't reprocess the blank _l_blue channel textures. Just about halfway done reprocessing failed textures. Working on looking through directories and trying to identify/segment out all directories into packs to be released.

- Ugh, Bethesda's content classification is wacky..... /atx/armor/.... has power armor, outfit "armor", and then actual armor..... And some of the outfit vs armor naming is weird compared to the officially published descriptions on Fallout wikis like fallout.fandom.com... I'm probably going to pack actual armors and outfits/clothing all into one pack because they're hard to differentiate, then separately put power armors into their own pack, because that one is absolutely massive in terms of gigabytes. But it means I have to comb through all these unintuitive directories and google each thing to see if anything comes up, like: /atx/armor/plaguerider/ is it an armor? power armor? outfit? consistency?!?! what's that? never heard of it
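One way to catch textures that slipped past PSNR checking because they had too little non-zero data is to measure that fraction first and send sparse textures to manual review instead of trusting their score. A NumPy sketch; the function names, the three outcome labels, and the 5% cutoff are assumptions for illustration, not values from this log.

```python
import numpy as np


def nonzero_fraction(img: np.ndarray) -> float:
    """Fraction of pixels with any non-zero channel value."""
    if img.ndim == 3:
        return float(np.any(img != 0, axis=-1).mean())
    return float((img != 0).mean())


def route(img: np.ndarray, psnr_db: float, min_db: float, min_fraction: float = 0.05) -> str:
    """Decide what to do with an upscale given its PSNR against a bicubic reference.

    Sparse textures go to 'manual', because a high PSNR there mostly reflects
    the shared empty background rather than a good upscale."""
    if nonzero_fraction(img) < min_fraction:
        return "manual"
    return "ok" if psnr_db >= min_db else "reprocess"
```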

7/30/2021:

- Validation almost done, but I made a scripting error that handled identical-texture comparisons incorrectly. A number of textures (particularly blue channel _l) are identical whether scaled via cubic or Gigapixel, which means they're blank blue channel textures, so a number of low-PSNR false positives exist. I still need to look in more detail at whether the arbitrary PSNR cutoff I selected is adequate and actually classifies correctly. Roughly 15k textures have failed upscaling by Gigapixel, so in line with what I expected. Haven't run the invalid PNG pass yet though; it may add to the number of errors, but probably not more than another 1k textures, as those are mostly due to crashes, not algo problems.

7/29/2021:

- PSNR validation not going well because of Windows being Windows. Whenever I load up my M.2 NVMe drives, if the file operation queue exceeds some arbitrary number of buffered ops, all of Windows freezes up and forces me to restart, and I've had no luck finding any solutions on the web. Luckily I've baked resume functionality into my scripts (I'm looking at you, Topaz Labs, with your $100-per-year buggy program with no resume-on-crash capabilities). Diffuse validation is done, but I'm waiting on the other 3 to finish so I can redo faulty textures.

- Managed to fix my windows crashing problems. Was fixed after installing an updated chipset driver straight from AMD instead of using one provided by my mobos mfg. So I'm now able to nearly max out my cpu doing PSNR comparisons. Still have about 52k textures to validate out of 82k textures. 6.5k have failed out of 30k textures. At this run rate I'll have about 17k failures which would take about a day of extra reprocessing but that also assumes that will go okay but things with gigapixel never do.

- Almost done with all 4x upscales. Spinning up PSNR validation very soon. Unfortunately it looks like up to 25% of textures may have failed; hard to say, as it varies a lot from folder to folder.
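The resume functionality mentioned in this entry can be as simple as an append-only log of finished items that the script checks on startup. A minimal sketch, assuming each texture is identified by a unique path string and `work` is your per-texture step (both hypothetical here). Flushing and fsync-ing after every item means a hard Windows freeze should lose at most the item in progress, though that item's output file may be half-written and still needs its own validity check.

```python
import os
from pathlib import Path
from typing import Callable, Iterable


def process_with_resume(items: Iterable[str], work: Callable[[str], object], done_log: Path) -> int:
    """Run `work` on every item not already listed in `done_log`; return how many ran."""
    done = set(done_log.read_text().splitlines()) if done_log.exists() else set()
    processed = 0
    with done_log.open("a") as log:
        for item in items:
            if item in done:
                continue  # finished in an earlier run
            work(item)
            # Record completion only after `work` returns, then force it to disk.
            log.write(item + "\n")
            log.flush()
            os.fsync(log.fileno())
            done.add(item)
            processed += 1
    return processed
```

Re-running the same command after a crash then picks up where it left off, which is exactly what a tool without resume support can't do on its own.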

7/26/2021:

- _L alpha and green done. _L blue almost done, ETA 7/28. Getting ready for PSNR validation tomorrow. The 7/31 completion date is very optimistic, as it looks like a good chunk of textures are failing to upscale properly, around 5%. Will also have to validate the specular textures differently, with a more sensitive PSNR pass, because dark textures are being darkened too much. Also need to write a script to handle Photoshop failures due to crashes so I can resume progress easily, but I may just have to run in smaller batches (like 5k textures per instance) to avert crashes, so I'll need roughly 10-12 instances running simultaneously to convert to DDS. The 2x downscale and sharpen pass are done together in XnConvert and shouldn't take more than a few hours for all 82k textures/split channels. Packing via BAKA isn't too bad either. I'll have to start writing a script to segment the textures into packs for in-game testing. I also need to write a script to extract the DXT header info from all textures via NVIDIA DDS Info so I can see if there are major deviations in formats that may break in-game rendering.

- _L red channel upscaling so close to done. _L green channel upscaling has begun. Based on current upscale rate 7/31 release date seems feasible but might end up being narrowly achieved.

- Custom ESRGAN model chugging away at ~600k out of 800k iterations, to see whether it makes sense to wait for a PSNR model with more iterations and re-run ESRGAN. A weird artifact I think I'm seeing is that it ends up learning DXT compression?
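Pulling header info for ~82k textures with one tool invocation per file is mostly waiting on child processes, so a thread pool is enough to keep many of them running at once. A sketch assuming `nvddsinfo` is on PATH and takes a single DDS path as its argument; the output is collected as raw text because its exact layout isn't shown in this log, so parsing is left out. `run_parallel` is a hypothetical helper.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor


def run_parallel(paths: list[str], tool: list[str], workers: int = 16) -> dict[str, str]:
    """Run `tool + [path]` for every path on a thread pool and collect stdout.

    Threads suffice because the real work happens in the child processes."""
    def run_one(path: str) -> tuple[str, str]:
        result = subprocess.run(tool + [path], capture_output=True, text=True)
        return path, result.stdout

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map keeps input order, so results line up with `paths`.
        return dict(pool.map(run_one, paths))
```

Usage (assumed invocation): `info = run_parallel(dds_paths, ["nvddsinfo"])`, then parse each `info[path]` for the format and resolution fields you need.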

7/20/2021:

- _L red channel upscaling almost done.

- ESRGAN self-trained on FO76 diffuse textures with 1/7th the training-iterations-to-texture-samples ratio gets decently close to ESRGAN as published, but slightly blurrier, since the network hasn't been fully trained yet. Going to continue running it and see how it progresses. But I also only ran the PSNR pass at 1/7th the ratio, so there may be a limit to how good it will get. I'll extend the PSNR training simultaneously while I continue the ESRGAN training on the original PSNR model and see if it gets any better at 2x more iterations; otherwise I'll reset the ESRGAN after the PSNR run reaches 7m iterations (vs the 1m now). Overall this is fairly promising to extend to the rest of the channels, but the sample size is pushing the number of required iterations to 7x or more vs the original ESRGAN paper.

7/18/2021:

- _L red channel almost halfway done. PSNR validator and PNG validator scripts mostly done; will have to run the PSNR validator once my CPU isn't as loaded up, as it's very resource intensive and currently only runs at the directory level, so I need to extend it to sub-dirs.

- ESRGAN FO76 diffuse training almost done, eta 2 days, but may need to extend the number of iterations if it doesn't work well because of the sheer number of texture samples. Haven't run tests yet but the loss functions appear to be reporting good figures, we'll see what the first pass results look like vs Gigapixel.

7/15/2021:

- Normal upscale nearly finished. I'll probably start the 2x downscales and png2dds photoshop exports for diffuse and normals while the _r/_l maps are being scaled.

- Custom FO76 ESRGAN model PSNR pass is almost done, will see in a couple days time if it is any better than Gigapixel. If not guess I'll just pour more effort into my bigdata upscaler.

7/14/2021:

- Normal upscale slowly progressing once again, restarted my 3 machines because a gigapixel instance crashed for no reason once again. Probably will take another day and will still need to filter out failed pngs/upscales.

- Custom upscaler progress:

- Modeled out all single channel transforms for data agnostic feature extraction, but need to add hue agnostic transforms, and program into the structs

- Wrote a performant spatial hash for the image features that would allow for easy nearest-feature interpolation (but it would probably have bad read performance until heuristics are performed, after which the db will be sorted and indexed for speed when 1TB+ of features are loaded).

- Normal upscale slowly progressing

- This dev log is officially a Gigapixel rant until this project is completed

- Gigapixel AI Model = Amazing

- Gigapixel AI frontend/backend = POS

- Fun Gigapixel AI features:

- One instance won't utilize more than 40-50% of your CPU

- Can't run two instances on a single account; have to set up a secondary local Windows account.

- If you pause / resume on a large batch, It will also mangle the batch list so you can't observe which texture it's on

- Two instances on two accounts will max out your CPU, BUT if you pause/resume your 2nd instance halfway through a batch of 4000 textures, it'll reduce its CPU utilization by ~66% on that process out of spite that it's not the favorite application running on your main Windows account.

- Thanks Gigapixel AI, guess I'll use those spare ghz on writing a better upscaler in the meantime.

- Wrote image handling infrastructure and basic feature extraction/reconstruction for a custom big-data upscaler. Need to make feature extraction hue, rotation, and mirroring agnostic (maybe also inversion agnostic), followed by whipping up a performant database/memory architecture that incorporates feature heuristics. Then it's just throwing data at it and seeing what sticks. Haven't decided on 1.66x/2x/3x/4x scaling yet; probably going to stick with 1.66x or 2x for a proof of concept, as 4x may be too much data without throwing quantization/compression into the mix to minimize dataset creep/overoptimization.

- Diffuse upscale is done, but I still need to run the PSNR validator and redo the messed-up textures. Normal map upscale in progress

- Planning on rolling my own big-data upscaler while waiting for upscaling to finish. I'm fed up with the inconsistent results coupled with slow training/validation/upscaling on top of finicky front ends, and I'm hoping I can come up with something better than what ESRGAN/Gigapixel have to offer. Got a model in my head, just need to do some tests to see if it's feasible. In a nutshell, these AI upscalers effectively compress the image features of large datasets into a network of filters whose weights/values are set up so that it generalizes to images it wasn't trained on. Upscaling is then just applying these filters to an image, and it makes up/hallucinates details that didn't exist before. Theoretically you could get better/faster results using big data and heuristics on patches of pixels and their nearest-neighbor relationships for different kinds of images (i.e. real world vs normals vs anime, etc.), without losing detail by compressing it down into stacked filters/weights. Storing, training, and upscaling should all be really fast compared to running a neural net. Worth giving it a shot
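To make the patch/nearest-neighbour idea concrete, here is a toy example-based 2x upscaler, assuming single-channel images stored as nested lists. Training stores each low-res 3x3 neighbourhood together with the 2x2 high-res block it corresponds to, and upscaling pastes the block whose stored neighbourhood is closest. The function names are hypothetical, the nearest search is brute force, and a real version would need the indexing and orientation handling discussed above.

```python
def neighborhood(img, y, x, r=1):
    """Flattened (2r+1)^2 patch around (y, x), clamping at the borders."""
    h, w = len(img), len(img[0])
    return tuple(img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                 for dy in range(-r, r + 1) for dx in range(-r, r + 1))


def build_table(lr, hr, s=2):
    """Map each low-res neighbourhood to the s x s high-res block it produced."""
    table = {}
    for y in range(len(lr)):
        for x in range(len(lr[0])):
            block = tuple(tuple(hr[y * s + j][x * s:(x + 1) * s]) for j in range(s))
            table[neighborhood(lr, y, x)] = block
    return table


def upscale(img, table, s=2):
    """Replace each pixel with the high-res block of its nearest stored neighbourhood."""
    keys = list(table)
    out = [[0] * (len(img[0]) * s) for _ in range(len(img) * s)]
    for y in range(len(img)):
        for x in range(len(img[0])):
            q = neighborhood(img, y, x)
            best = min(keys, key=lambda k: sum((a - b) ** 2 for a, b in zip(k, q)))
            for j, row in enumerate(table[best]):
                out[y * s + j][x * s:(x + 1) * s] = list(row)
    return out
```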

7/11/2021:

- Almost done upscaling diffuse textures with Gigapixel. Wrote a PSNR/RMSE checker/validator for upscales to ensure that the final output is visually similar to a bicubic upscale. Gigapixel fucked up a decent number of textures (looks like ~1000 textures are boned and need to be tweaked or have a different model run on them to keep them from getting the blue texture of death).

- Training an ESRGAN model that learns to upscale all FO76 diffuse textures from 1/4-scale versions of themselves. This will take about 7-10 days to train to see if it produces better results than Gigapixel, but it will likely be a futile effort.
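A minimal PSNR/RMSE check of the kind described here, assuming both images are already loaded as flattened sequences of 8-bit samples of equal size (the Gigapixel upscale and a bicubic upscale of the same original). PSNR is 20·log10(peak / RMSE). The 30 dB cutoff in `passes` is an arbitrary placeholder, not the author's threshold.

```python
import math


def rmse(a, b):
    """Root-mean-square error between two equal-length sequences of pixel values."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of samples")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def psnr(a, b, peak=255):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    e = rmse(a, b)
    return math.inf if e == 0 else 20 * math.log10(peak / e)


def passes(upscaled, reference, min_psnr=30.0):
    """Flag an upscale that drifted too far from its bicubic reference (placeholder cutoff)."""
    return psnr(upscaled, reference) >= min_psnr
```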

7/10/2021:

- Damnit Gigapixel, you need to learn how to stop crashing all the time. Have to wait for the job on my laptop to finish, then merge the upscaled images with my desktop's, filter down to the unconverted textures, and restart the upscaling process. I wouldn't have to do this, but Gigapixel pretends it never crashes and never picks up/resumes where you left off, so I have to use scripts to check that there is an upscale AND that it's a valid PNG, because it sometimes crashes during PNG saves. I swear if I could break the zip password on your *.tz file holding your mythical TensorFlow model I would (it's longer than 9 characters, so don't even think about brute forcing it unless you've got 100k+ GPUs ready to go). Maybe someone with x64dbg skills could crack it so we could bypass their buggy front end and just use Python to batch jobs locally/externally.

- Started getting back into ESRGAN since my GPUs are free while I'm locked into CPU mode on Gigapixel for the quality. Going to see if I can first train a DXT compression remover (similar to JPEG-to-RAW AI processing that removes JPEG compression), followed by specialized diffuse/normal/spec/lighting 4x models. I'm also very curious what happens with in-sample self-training, i.e. scale down the existing FO76 textures to 25% res, run the AI training, then apply it to the original 100% res textures.
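A cheap way to catch PNGs that were cut off mid-save is to check for the 8-byte PNG signature at the start and the fixed 12-byte `IEND` chunk at the end. This is a sketch, not the author's script: it does not decode the image data, and `still_to_do` assumes the upscaled output keeps the source file name, which may not match Gigapixel's naming.

```python
from pathlib import Path

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
IEND_CHUNK = b"\x00\x00\x00\x00IEND\xaeB`\x82"  # zero-length IEND chunk plus its CRC


def looks_like_complete_png(path) -> bool:
    """True if the file starts with the PNG signature and ends with IEND.
    Catches truncated saves; does not decode or CRC-check the image data."""
    with open(path, "rb") as f:
        head = f.read(8)
        size = f.seek(0, 2)  # seek to the end; returns the new offset (file size)
        if size < len(PNG_SIGNATURE) + len(IEND_CHUNK):
            return False
        f.seek(size - len(IEND_CHUNK))
        tail = f.read()
    return head == PNG_SIGNATURE and tail == IEND_CHUNK


def still_to_do(source_dir, output_dir):
    """Source PNGs whose upscaled counterpart is missing or truncated.
    Assumes (hypothetically) that outputs keep the source file name."""
    todo = []
    for src in sorted(Path(source_dir).glob("*.png")):
        out = Path(output_dir) / src.name
        if not out.is_file() or not looks_like_complete_png(out):
            todo.append(src)
    return todo
```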

7/9/2021:

- Damnit Topaz Gigapixel, you're driving me crazy, just completely randomly crashing. Couple that with seemingly arbitrary upscaling failures causing entirely mangled textures? The definition of insanity is doing the same thing twice and expecting a different result... well, I must be insane, because if I spin up an instance of the same program on a different account and run it on the same image, it'll spit out a good image!? Then again, I'm using their crazy HQ models that weren't supposed to be used by the public. Honestly, I'm so fed up with it.

- To combat this, I'm going to literally rescale via all methods, then use ImageMagick RMSE or PSNR comparison to pick the texture with the least deviation from the ground truth; if none of them pass a threshold, they all go in the bin and I'll assess them manually. You'd think a program that's been out for 2+ years and charges $100 per year would be more stable, but nope. No command line access? Poor model selection for advanced users? Lack of crash logs? No batch resume after a crash or duplicate-skipping option, so your users have to pick up the pieces and manually filter out what was actually processed and what wasn't? Absolutely horrendous list management? No ability to process multiple images at the same time despite having hardware that can handle it? Forcing fp16 models on fp32/fp64-capable GPUs, leaving users to hack JSON files? No post-upscale comparison tooling for optimizing model selection? Monitor turns off while processing = all progress halts? Putting back a suffix on a filename I specifically removed last time I ran it on this same file? No super basic error detection for results like this, or deviation cutoff options/logging/warnings... are you serious?

I'd go back to ESRGAN or other open-source super-resolution models if yours weren't so darn good the 60% of the time everything actually works.

- Seems I'm only able to do about 150 upscales an hour, which means it would take me ~21 days to run, plus or minus. Going to look at spinning up another instance or two to fully max out this machine, and will look at spinning up a couple of older rigs to marginally speed up the process

- Ugh, after reviewing the ~1200 upscales from last night on the new HQ CPU model, it appears to completely randomly mangle some images into a weird blue pattern; not sure if I'll be able to debug or compensate for this

- Figured out the random blue pattern mangling: there appear to be some problems with the AI model when color bleed compensation isn't enabled on the highest quality 192x192 kernel. Additionally, the HQ 192x192 kernel appears to apply extremely excessive sharpening to certain noise patterns (i.e. finding patterns where there are none). The 256x256 kernel, though less sharp, appears to be more stable (on high detail/noise) and w/ color bleed compensation achieves very close results. After an unsharp mask followed by slight sharpening, it's virtually indistinguishable from the same process on the 192x192 kernel, but it appears to be more stable while also running slightly faster.

- Spinning up my other 6-core/12-thread laptop to increase upscaling throughput slightly, as this will take a while. Two Gigapixel instances on my 3960x saturate it, so there's not much headroom there. Really wish these HQ models could run on GPUs.
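Picking the least-deviating candidate can be sketched as below, assuming each candidate upscale and the ground-truth reference (e.g. a bicubic upscale) have already been loaded as equal-length pixel sequences. The `max_rmse` cutoff and the method names in the usage are hypothetical; when every candidate fails, the function returns `None` so the texture goes to manual review.

```python
import math


def rmse(a, b):
    """Root-mean-square error between two equal-length pixel sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def pick_best(candidates, reference, max_rmse=8.0):
    """candidates: dict of method name -> pixel sequence.
    Returns the name of the candidate closest to the reference, or None if even
    the best one exceeds max_rmse (i.e. it goes to the manual-review bin)."""
    scores = {name: rmse(px, reference) for name, px in candidates.items()}
    best = min(scores, key=scores.get)
    return best if scores[best] <= max_rmse else None
```

For example, `pick_best({"hq": hq_px, "std": std_px}, bicubic_px)` returns whichever method name stayed closest to the bicubic version.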
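For reference, an unsharp mask adds back a scaled copy of the difference between an image and a blurred version of itself: out = img + amount × (img − blur). The sketch uses a 3x3 box blur to keep it short, whereas xnconvert and most editors use a Gaussian blur. The `amount`/`threshold` values are illustrative, not the settings used here.

```python
def box_blur(img):
    """3x3 box blur on a 2D list of values (a stand-in for a Gaussian), clamping at the edges."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    total += img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
            out[y][x] = total / 9
    return out


def unsharp_mask(img, amount=0.5, threshold=0.0):
    """out = img + amount * (img - blur); differences below `threshold` are ignored,
    and results are clamped to the 0..255 range."""
    blurred = box_blur(img)
    out = []
    for row, brow in zip(img, blurred):
        new_row = []
        for v, b in zip(row, brow):
            detail = v - b
            if abs(detail) < threshold:
                detail = 0.0
            new_row.append(min(255.0, max(0.0, v + amount * detail)))
        out.append(new_row)
    return out
```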

7/7/2021:

- After digging into the bowels of Topaz's products, running countless comparisons (like finding out that running the old models on the CPU instead of the GPU yields better results) and researching quality settings, I found an obscure forum comment that led to a (hidden) magical configuration in Gigapixel v5.5.2 that effectively uses the "root" TensorFlow model, before Topaz goes and builds optimized models on top of it for performance/etc. The only problem is that it only appears to run on the CPU, and while I've got a 3960x, it's going to take longer, but the results should be superior. Gigapixel is a temperamental program though: it seems you have to trick it into processing files in the background on other local Windows accounts by minimizing the program to the taskbar while it's processing an image, letting it go through a few images, THEN switching users; otherwise it straight up halts everything. I'm guessing Windows probably disables the rendering GPU for the alt account once focus is on another one, and Gigapixel probably spins waiting for a rendering call. But if you minimize it and let it run a bit, it must reach a section of its runtime that skips rendering the UI and continues upscaling.

7/6/2021:

- The newest version, Gigapixel v5.5.2, vs v4.9.3.1 (used for the original texture pack) appears to be really, really bad at detail extraction on diffuse materials now; it's also quite destructive to existing details in certain colors/luma and significantly less sharp in a large number of cases. However, its scaling of normals appears to be better. It's unfortunate they don't natively allow legacy model selection. I'm going to have to install multiple versions on a flash drive and see which performs best; luckily there appear to have been only 2 or 3 major model revisions. I'll have to run them on a sampling of _d/_n/_l/_r textures and see which ones give the best (subjectively sharper/low-mutation) results for each type on average. Oh boy, fun fun fun.

- After tinkering, it looks like v5.4.5 gives the best results (you can select HQ/non-HQ models in preferences, and it has a lot of bugfixes). Subjectively selected "best" settings:

- _d / _l_red / _l_green = non-hq / non-cb / 0 denoise / 100 deblur

- _l_blue = hq / no-cb / 100 denoise / 0 deblur (exclude all low-filesize images, as some mostly-black images get mangled)

- _l_alpha = Lanczos via xnconvert or other image software

- _n / _r = hq / cb / 0 denoise / 100 deblur

- others like _a/_alpha/_b/_bw/_c/_e/_f/_g/_grad/_glow/_fx/_glowfx/_h/_lgrad/_m/_p/_s/scan are unknown and need to be researched

- _*_back.dds files follow whatever the * type is, I think

- Potentially a Gigapixel sharpen pass, followed by an xnconvert unsharp mask + sharpen to refine some blurred details
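The per-suffix settings above could be encoded as a lookup table so a script can sort files into batches. This is a sketch under assumptions: split lighting channels are assumed to be named like `name_l_red.png` (hypothetical naming), `_back` variants follow their base type as noted, and suffixes from the "unknown" list return `None`.

```python
from pathlib import PurePath

# Settings transcribed from the list above; "cb" = color bleed compensation.
SETTINGS = {
    "d":       {"model": "non-hq", "cb": False, "denoise": 0,   "deblur": 100},
    "l_red":   {"model": "non-hq", "cb": False, "denoise": 0,   "deblur": 100},
    "l_green": {"model": "non-hq", "cb": False, "denoise": 0,   "deblur": 100},
    "l_blue":  {"model": "hq",     "cb": False, "denoise": 100, "deblur": 0},
    "l_alpha": {"model": "lanczos"},  # resized outside Gigapixel (xnconvert etc.)
    "n":       {"model": "hq",     "cb": True,  "denoise": 0,   "deblur": 100},
    "r":       {"model": "hq",     "cb": True,  "denoise": 0,   "deblur": 100},
}


def texture_type(filename):
    """Return the SETTINGS key for names like 'foo_d.dds' or 'foo_l_blue.png'
    (hypothetical split naming); '_back' variants use their base type."""
    stem = PurePath(filename).stem.lower()
    if stem.endswith("_back"):
        stem = stem[: -len("_back")]
    for key in sorted(SETTINGS, key=len, reverse=True):  # longest suffix first
        if stem.endswith("_" + key):
            return key
    return None  # e.g. _glow/_m/_s: still unresearched
```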

- The existing mod appears to still be functional for 95% of all textures, though Bethesda seems to have made quite a lot of texture changes/updates. I'm redoing my batch processing so that it's more scalable and less manual work. Managed to figure out how to parallelize Photoshop by running instances on multiple local Windows accounts on the same machine, and wrote some scripts to organize all textures into folders by type so I can use PS's folder-based automation to bypass the 400 open image limit. Wrangling Gigapixel, but there's no command line functionality, and it really doesn't like dealing with more than 10k images at a time (it seems to have very inefficient list management!?). Successfully parallelized Gigapixel across multiple local Windows accounts, so I'm now scaling textures at nearly 3x the speed. Currently averaging roughly three 4K textures per second, so scaling about 85k split textures would take ~8 hours, plus some manual swap time due to the Gigapixel list management problem. Double that to 16hrs to include the Gigapixel sharpening pass. _l channel splitting takes about an hour. PS _d/_r/_n to PNG conversion runs at about 3 per second on 42k textures, so ~4hrs, but PS crashes every 6k or so textures, so there's added cleanup time. Guessing ImageMagick will take about 2-3hrs to merge the _l RGBA channels. Guessing my xnconvert sharpening pass + 2x variant generation will be about 4hrs. The biggest bottleneck is DDS saving via PS; guessing this alone will take 24hrs minimum of processing, plus crash time. Add in organization and BAKA archiving, probably another 6-12 hrs of processing for all FO76 textures, and probably another 6-12 for the upload to Nexus. Total processing time from scratch is about 75-100hrs; granted, most of it is AFK, but it often requires manual intervention. Need to look at some way to script ddsinfo to give me a clean list of every texture and its format so I can more easily debug why some textures look wrong in game. TBD.
Hoping to make a refreshed release of all textures and more on 7/17/2021 at this rate.
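As a possible starting point for that texture/format list, a DDS header can be read directly: the file starts with `DDS `, followed by a 124-byte header holding height, width, mip count, and a FourCC code. When the FourCC is `DX10`, an extended header follows whose first field is a `DXGI_FORMAT` value. This is a Python sketch, not ddsinfo itself, and the name table covers only a few BC formats.

```python
import struct
from pathlib import Path

# Subset of DXGI_FORMAT enum values relevant to block-compressed textures.
DXGI_NAMES = {71: "BC1_UNORM", 72: "BC1_UNORM_SRGB", 77: "BC3_UNORM",
              78: "BC3_UNORM_SRGB", 80: "BC4_UNORM", 83: "BC5_UNORM",
              84: "BC5_SNORM", 98: "BC7_UNORM", 99: "BC7_UNORM_SRGB"}


def dds_info(data: bytes):
    """Width, height, mip count and format from the first 148 bytes of a DDS file."""
    if data[:4] != b"DDS ":
        raise ValueError("not a DDS file")
    height, width = struct.unpack_from("<II", data, 12)  # dwHeight, dwWidth
    mips = struct.unpack_from("<I", data, 28)[0] or 1     # 0 means no mip chain
    fourcc = data[84:88]                                  # ddspf.dwFourCC
    if fourcc == b"DX10":  # DDS_HEADER_DXT10 follows the 128-byte legacy header
        dxgi = struct.unpack_from("<I", data, 128)[0]
        fmt = DXGI_NAMES.get(dxgi, f"DXGI_{dxgi}")
    elif fourcc.strip(b"\x00"):
        fmt = fourcc.decode("ascii", "replace")           # e.g. DXT1, DXT5, ATI2
    else:
        fmt = "uncompressed (bitmask)"
    return {"width": width, "height": height, "mips": mips, "format": fmt}


def list_textures(root):
    """Yield (relative path, info) for every .dds under root."""
    for path in sorted(Path(root).rglob("*.dds")):
        with open(path, "rb") as f:
            head = f.read(148)  # 4 magic + 124 header + 20 DX10 header
        yield path.relative_to(root), dds_info(head)
```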

- Getting my life wrecked by the COVID-19 pandemic & life problems

- The internet is like a series of tubes, and a big fat one flowing 1000mbps will be coming out of a wall socket near me soon. Big/faster updates coming soon

- Progress has been slow due to life getting in the way. Validated again that the texture ba2's weren't updated with the new updates, but I failed to notice that they're approaching updates differently than I expected: they're creating new ba2's called 00UpdateTextures and 01UpdateTextures, some of which contain duplicate textures. I'm assuming 01 overrides 00; managing this is going to be messy. Currently extracting these new textures and going to issue updates to the existing 2x packs. Also noticed I may not have been exporting Backpacks at all; will look into this and upscale any I may have missed, or release them as a separate pack

- Found ~500 corrupted files in the armor pack I was working on. Not sure if it was a copy error or a write cache flush issue or what, but now I have to validate all my existing files and potentially reprocess ones I'd already finished that were ready for DDS export & packing. ughhhhhhhhhhhhhhhhhhhhh Luckily this isn't so hard to test for w/ a Threadripper and PCIe 4.0 M.2 drives. If I have to reprocess w/ Gigapixel then that'll take a while :\

- Nope, just the armor pack is affected, apparently; DDS & PNG files experienced some sort of copy failures as far as I can tell, in very sporadic locations. I'm just going to scrap this one and start from scratch

- Had a bunch of issues getting everything to work properly on my PC (CSM support problems, PCIe bifurcation issues, SMR HDD issues, having to fully reinstall Windows, etc.). Finally solved everything; getting back to working on this and releasing a few packs this weekend

- I'm now keeping historical ba2 texture archives and have validated that the new update Bethesda released didn't change any textures, so I don't have to re-release any packs (I was worried the new outfits would force me to re-release, but everything appears to have shipped with the original Wastelanders files)

- I hate device-managed SMR HDDs. Stupid Seagate marketing not disclosing critical info. It would be fine if it didn't clog the Windows write queue and basically halt the whole PC until the cache is flushed; holy moly, things drop to like 8mbps when everything is backed up

- Finished rebuilding my PC (yay, no more BSODs and random crashes). Need to restore my 8TB RAID array to continue work
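One way to make that kind of check repeatable is to store a hash manifest per game update and diff the manifests. This sketch assumes the texture archives have already been extracted to a folder (e.g. with Archive2) and uses SHA-256 of file contents. The function names are hypothetical.

```python
import hashlib
from pathlib import Path


def manifest(root):
    """Map each file's relative path to the SHA-256 of its contents."""
    root = Path(root)
    return {p.relative_to(root).as_posix(): hashlib.sha256(p.read_bytes()).hexdigest()
            for p in sorted(root.rglob("*")) if p.is_file()}


def diff_manifests(old, new):
    """Compare two snapshots: which textures were added, removed, or changed."""
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    changed = sorted(k for k in old.keys() & new.keys() if old[k] != new[k])
    return added, removed, changed
```

An update that only adds new textures shows up with empty `removed` and `changed` lists, meaning existing packs don't need re-releasing.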

- Writing a script that uses ImageMagick to get the mean/max of each image channel, to determine whether a channel needs to go into a separate workflow to avoid Gigapixel's scaling issues with low-mean images

- Unfortunately, the issue is more pervasive than I thought. Gigapixel upscales of near-black textures cause artifacts in the subsurface channel and occasionally the reflection map. Need to rework all lighting and reflection textures again to ensure these artifacts don't show up in existing/future pack releases

- Finally found the problem. Gigapixel is introducing artifacts in certain 4x upscales of the subsurface scattering channel of lighting textures that have non-zero but very low subsurface scattering. The result is artificially bright single pixels that got filtered out by the NN downscale in the 2x release, but cause visual artifacts in the 4x release. Need to manually check this channel for all 2x and 4x textures and see if I can tweak the upscale settings so that artifacts aren't generated; this will probably require upscaling this channel separately with different parameters. I'm going to guess this may be a problem with the _r textures as well (since they also have very low-intensity data in many cases), and I'll need to do a manual comparison pass on all textures to see what I need to tweak

- Found a weird lighting issue with the 4x reconscope that didn't show up in the 2x reconscope. Need to debug what's going on before releasing 4x. Also ran into cases where resaving certain PNGs with xnconvert turned lighting texture PNGs into indexed images, which broke the DDS export process.
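The routing decision could look like the sketch below, assuming the channel's 8-bit values are already available as a flat sequence (for example, dumped via ImageMagick). The `low_mean` cutoff of 8 and the route names are placeholders, not values from this log.

```python
def channel_stats(values):
    """Mean and max of one 8-bit channel given as a flat sequence."""
    return sum(values) / len(values), max(values)


def route(values, low_mean=8.0):
    """Send near-black channels (low but non-zero data) to a separate workflow."""
    mean, peak = channel_stats(values)
    if peak == 0:
        return "empty"     # all zero: nothing to upscale, a plain resize is fine
    if mean < low_mean:
        return "low-mean"  # the near-black case that Gigapixel struggles with
    return "normal"
```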
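To find these "artificially bright single pixels" automatically, one option is to flag interior pixels that exceed all 8 of their neighbours by some margin. This is a sketch, assuming a single 8-bit channel stored as nested lists. The 40-level margin is a guess, and border pixels are skipped.

```python
def isolated_bright_pixels(img, margin=40):
    """Coordinates of interior pixels brighter than every one of their 8 neighbours
    by at least `margin` (8-bit scale): single-pixel spikes rather than features."""
    hits = []
    for y in range(1, len(img) - 1):
        for x in range(1, len(img[0]) - 1):
            v = img[y][x]
            neighbours = [img[y + dy][x + dx]
                          for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                          if (dy, dx) != (0, 0)]
            if v - max(neighbours) >= margin:
                hits.append((y, x))
    return hits
```

A genuine bright detail usually spans several pixels, so it is not flagged, while a lone hot pixel in a near-black channel is.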
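Indexed PNGs can be caught before DDS export by reading the `IHDR` chunk, which the PNG specification requires to be the first chunk. The byte at offset 9 of its data is the color type, and type 3 means palette/indexed. This is a sketch, with `parse_ihdr` working on the first 33 bytes of a file.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
COLOR_TYPES = {0: "grayscale", 2: "RGB", 3: "indexed", 4: "grayscale+alpha", 6: "RGBA"}


def parse_ihdr(head: bytes):
    """Return (bit_depth, color type name) from the first 33 bytes of a PNG:
    8-byte signature, 4-byte chunk length, b'IHDR', then 13 bytes of IHDR data."""
    if head[:8] != PNG_SIGNATURE or head[12:16] != b"IHDR":
        raise ValueError("not a PNG (or IHDR is not the first chunk)")
    _width, _height, bit_depth, color_type = struct.unpack(">IIBB", head[16:26])
    return bit_depth, COLOR_TYPES.get(color_type, f"unknown({color_type})")


def is_indexed(path) -> bool:
    """Flag palette PNGs (color type 3) before they reach the DDS export step."""
    with open(path, "rb") as f:
        return parse_ihdr(f.read(33))[1] == "indexed"
```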

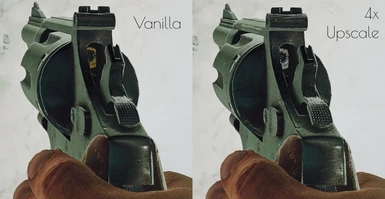

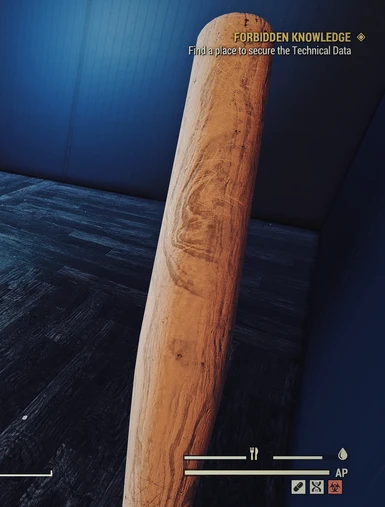

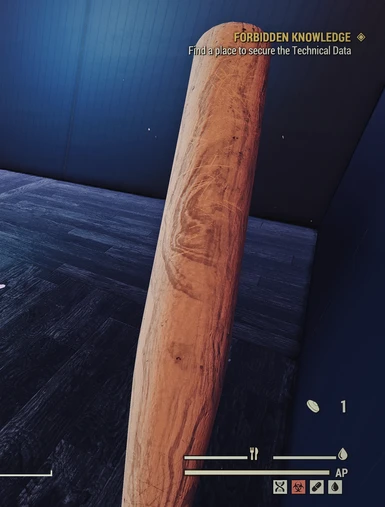

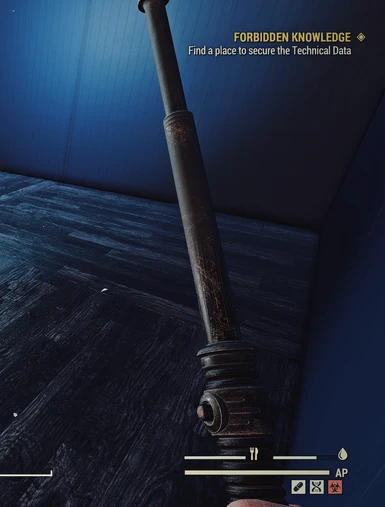

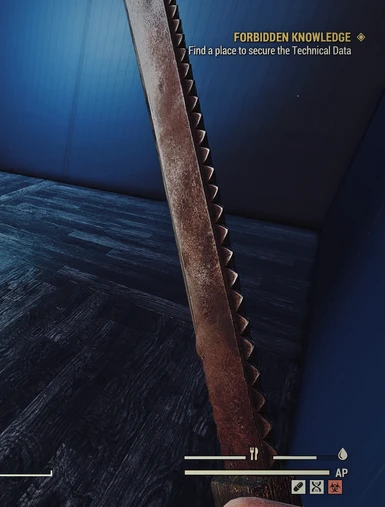

- 2x & 4x ranged weapons done and tested. 2x vs 1x ranged weapon screenshots below; still need to take 4x vs 1x ones and add them to Nexus

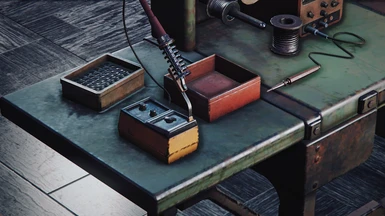

- Found missing crafting stations, reprocessing whole pack

- Tested the 'unchangeable'/default box filter vs Point Filtering, Triangle Low Pass, and Bicubic; Bicubic gives the best result, with a significant increase in sharpness without adding noise/graininess in mipmap transitions/distant maps. Will re-export all previous textures to increase overall quality, since the majority of the time you're probably looking at mip 1 or higher and not the most detailed mipmap.

- Testing has revealed that some gold accents are lost for some reason on items like the black powder weapons, on the ammo of revolvers, on the wire of plasma rifles. Probably a format problem, need to debug what's going on before releasing further packs as it may affect clothing, powerarmor, etc down the line

- Bug: Gold accent loss on items like black powder weapons, plasma rifles, and the ammo inside revolvers. I've debugged the root of this issue: if the original lighting texture was saved as BC1 (i.e. RGB, no alpha, 4bpp) you MUST save it as BC1. I've found that BC7 RGB nukes all lighting, while BC7 RGBA and BC3 RGBA distort the gold accents. But saving as BC1 exclusively nukes lighting for models that have alpha (i.e. the tinker workbench). So you need a script that, after processing, checks the original file to determine whether it had alpha and makes sure it's marked for a separate save-dds workflow. I could skip the lighting textures altogether, but in my tests that results in less realistic baked ambient occlusion (it ends up overly smooth/linear, conforming to the original low-res data, while the upscaled version captures detailed AO cracks & crevices)

- Found the problem, was w/ lighting textures. Modified my scripts to detect 3 channel vs 4 channel light dds's and export accordingly.
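The BC1 rule above can be checked from the original file's DDS header. This sketch treats either a legacy `DXT1` FourCC or a `DX10` header with `DXGI_FORMAT_BC1_UNORM`/`_SRGB` (71/72) as BC1, and sends everything else to the alpha-capable BC7 export. BC1 can technically carry 1-bit alpha; the sketch follows the log's simplification that BC1 means RGB without alpha.

```python
import struct

BC1_DXGI = (71, 72)  # DXGI_FORMAT_BC1_UNORM, DXGI_FORMAT_BC1_UNORM_SRGB


def is_bc1(header: bytes) -> bool:
    """True if a DDS header (first 148 bytes of the file) declares BC1/DXT1."""
    fourcc = header[84:88]  # ddspf.dwFourCC
    if fourcc == b"DXT1":
        return True
    if fourcc == b"DX10":
        return struct.unpack_from("<I", header, 128)[0] in BC1_DXGI
    return False


def lighting_export_format(original_path) -> str:
    """Per the bug note: an _l that shipped as BC1 must go back out as BC1;
    anything else gets the alpha-capable BC7 export."""
    with open(original_path, "rb") as f:
        return "BC1" if is_bc1(f.read(148)) else "BC7"
```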

- Determining whether any _r textures have alpha channels; if not, BC1 RGB would use significantly less memory/disk space

- Looking into saving _r and _l as BC1 instead of BC7 to reduce the total memory footprint of 4x textures by 25%. Could probably do the same w/ diffuse maps, but I've seen the shitty banding/quantization artifacts it produces on large models; it may still be worth it for weapons to improve their load performance.
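The 25% figure follows from block sizes. BC1 stores each 4x4 block in 8 bytes (4 bpp) and BC7 in 16 bytes (8 bpp), so halving two of four equally sized maps saves a quarter. This sketch assumes _d/_n/_r/_l share one resolution and all start at 16 bytes per block (BC7, BC3, or BC5). The 4096x4096 size is just an example.

```python
def bc_size(width, height, bytes_per_block, with_mips=True):
    """Bytes for a block-compressed texture (4x4 pixel blocks), optionally
    summed over the whole mip chain down to 1x1."""
    total = 0
    while True:
        blocks = ((width + 3) // 4) * ((height + 3) // 4)
        total += blocks * bytes_per_block
        if not with_mips or (width == 1 and height == 1):
            return total
        width, height = max(1, width // 2), max(1, height // 2)


BC1, BC7 = 8, 16  # bytes per 4x4 block: 4 bpp vs 8 bpp

# Hypothetical 4096x4096 set of _d/_n/_r/_l maps, all starting at 16 bytes/block.
before = 4 * bc_size(4096, 4096, BC7)
after = 2 * bc_size(4096, 4096, BC7) + 2 * bc_size(4096, 4096, BC1)  # _r and _l as BC1
print(f"{1 - after / before:.0%} smaller")  # prints "25% smaller"
```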

- Mipmaps auto-generated by intel's plugin use the box filter instead of better filters that improve texture sharpness when looking at them from a distance.

- To fix that, I need to build a custom version of the already custom SNORM-supporting Intel plugin so that it uses other built-in filters like Lanczos or Bicubic for better/sharper mipmaps.

- Build documentation for the Intel plugin is super sketchy; I had to turn up MSBuild verbosity to find out that the build instructions fail to mention you need a binary of the Intel Implicit SPMD Program Compiler (ISPC). v1.13 doesn't work; you have to get the v1.12 binaries
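For comparison, the box filter is just a 2x2 average per mip level. Bicubic or Lanczos replace it with a wider weighted kernel that preserves more edge contrast. Below is a sketch of a box-filtered mip chain on a single channel stored as nested lists; it illustrates the filter and is not the plugin's code.

```python
def box_downsample(img):
    """Halve a 2D image by averaging each 2x2 block (the 'box' filter).
    An odd trailing row/column is dropped; a dimension of 1 stays 1 by
    repeating its only pixel."""
    h, w = len(img), len(img[0])
    nh, nw = max(1, h // 2), max(1, w // 2)
    out = []
    for y in range(nh):
        row = []
        for x in range(nw):
            ys = (min(2 * y, h - 1), min(2 * y + 1, h - 1))
            xs = (min(2 * x, w - 1), min(2 * x + 1, w - 1))
            row.append(sum(img[a][b] for a in ys for b in xs) / 4)
        out.append(row)
    return out


def mip_chain(img):
    """Level 0 is the full image; each further level halves until 1x1."""
    levels = [img]
    while len(levels[-1]) > 1 or len(levels[-1][0]) > 1:
        levels.append(box_downsample(levels[-1]))
    return levels
```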

- Found _f/_g/_m textures for certain clothes and guns. Need to determine proper dds format and modify scripts & conversion procedure to include

- Getting hit with random/sporadic, silent file save failures that end up not generating a DDS/PNG when my computer is overburdened; having to do extra manual work to ensure everything is properly saved/converted

- Found non-standardized naming in certain textures (I'm lookin' at you, armorraiderskilanes body_l.dds, with that sneaky space instead of an underscore) that my scripts didn't handle properly; had to modify them to handle these without skipping over them

- Last night's nexusmods outage forced me to re-do some of my uploads :[

- The location I was going to use to upload these, which has ~400mbps of upload bandwidth, unfortunately has NexusMods blocked and blocked my VPN too. So I'm stuck slowly uploading these @ 5mbps. I have to give NexusMods props for their web-based uploader, which is resilient to upload failures/interruptions (when their entire website doesn't go down haha) without losing all upload progress.

- My computer is basically 100% loaded 24/7 now, upscaling, sharpening, and DDS compressing. Photoshop is the biggest bottleneck and won't allow multiple instances of itself to run, followed by Gigapixel, which doesn't support multi-GPU workloads. My memory usage is ~50GB against 32GB of physical RAM, with my enterprise Intel PCIe SSD working overtime, simultaneously serving as a giant page file while reading/writing hundreds of gigabytes of textures.

- The file structure of fallout combined with obscure weapons/objects/items I've never heard of make managing and packaging packs difficult to ensure everything is "complete" before releasing

- Finally put together a fast, mostly scripted workflow to upscale all of the vanilla FO76 textures via Gigapixel AI/Sharpen AI. Lots of caveats, channel splitting, format problems, file management problems, and bad workflows conquered. May do 2k versions of files if people ask for it, TBD

- Adding some screenshots of in-game rendering comparisons (note: it's under a personalized ReShade, so there's some added sharpening on both the vanilla and HD versions)

How the textures in this mod are created:

- Use Archive2 to extract the textures you want out of the FO76 texture ba2 files

- Use ImageMagick to convert the *_l.dds files into individual PNGs, one per channel (r/g/b/alpha). Other programs remove colors from transparent pixels, which doesn't work for this type of packed texture, but more importantly, it's faster/scriptable to do this via ImageMagick than w/ Photoshop. The only reason I'm using Photoshop at all is the Intel DDS plugin; all other image editors/utilities I've tried can't properly read/export all the FO76 textures. Command line example for one channel, w/ low compression for faster writes (PNG compression is lossless, so the level doesn't change the pixel data): `magick convert image_rgba.png -define png:compression-level=1 -channel R -separate image_r.png`

- Use Photoshop w/ the Intel texture plugin modded for FO76 (here on Nexus Mods) to open the remaining *_d.dds/*_n.dds/*_r.dds files and export them as PNG (use automation/batching for large numbers of files; note that Photoshop has a 400 open file limit, in case you're batching a lot and wonder why files are missing)

- Use Gigapixel AI's 4x scaling (I find that 0 noise suppression and 100 blur removal works best for FO76 textures) and its built-in batch functionality for mass upscaling. This step is done for the _d, _n, and _r files and for the split _l channel files (red/green/blue/alpha), not for whole _l files, as upscaling a texture whose channels are technically independent data results in bad-looking textures. _r files don't seem to matter as much, so the added overhead of splitting each channel, upscaling, then re-merging isn't needed as far as I can tell, but it is required for _l's. You can also use 6x and then manually scale down to 4x, or do 4x then 2x to get 8x, as their custom-entry 8x isn't a true 8x and is currently blurrier than their 6x.

- (Optional) Use Sharpen AI's auto sharpen functionality on the upscaled files generated in the Gigapixel step. Without this, textures don't seem as sharp/crisp.

- Use ImageMagick to merge the r/g/b/a channels of the upscaled _l files into one _l.png. This can be done in Photoshop, but ImageMagick is easily scriptable, much faster when you want to do this for 1000+ textures at once, and can be launched as independent processes to take advantage of CPU multithreading. Note: some _l files have no alpha channel, and adding one can break in-game rendering. Command line example: `magick convert image_r.png image_g.png image_b.png image_a.png -combine image_rgba.png`

- Use Photoshop to export the PNGs w/ the Intel plugin. _d should be sRGB Color + Alpha BC7, _l should be linear Color + Alpha BC7 (except _l files that were originally BC1, which must stay BC1; see the gold accent bug in the dev log), _n should be sRGB Normal Signed, and _r should be sRGB Color + Alpha BC3 (I think this is OK; I had trouble w/ some files using BC7, but they worked w/ BC3, not sure why). (Technically the majority of _d/_l's don't have alpha, and saving them w/ alpha reduces the fidelity of the r/g/b channels; however, by overcompensating and using the BC7 format instead of the original BC3 format, you actually achieve better fidelity.)

- Export the files into the same FO76 folder structure they were extracted with and archive them via BAKA (found here on Nexus), and you have the files you see here in the mod
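The channel merge step can be scripted so that the alpha file is only passed when the original _l had alpha. The sketch below builds the same `magick convert ... -combine` command shown above as an argument list and runs several commands in parallel. The `<stem>_r.png`-style naming is hypothetical. Because the arguments are passed as a list with no shell involved, names containing spaces (like `armorraiderskilanes body_l.dds`) stay intact.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def combine_command(stem, has_alpha, folder="."):
    """Argument list merging split channels <stem>_r/_g/_b[/_a].png into <stem>.png;
    the alpha file is only passed if the original texture had alpha.
    The file naming scheme is hypothetical."""
    folder = Path(folder)
    channels = ["r", "g", "b"] + (["a"] if has_alpha else [])
    inputs = [str(folder / f"{stem}_{c}.png") for c in channels]
    return ["magick", "convert", *inputs, "-combine", str(folder / f"{stem}.png")]


def run_all(commands, workers=8):
    """Run each command as its own process, at most `workers` at once.
    No shell is involved, so file names containing spaces stay intact."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda cmd: subprocess.run(cmd).returncode, commands))
```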

- Notes:

- You can use ESRGAN as well (which I was actually using before, with okay results); it's open source, but I've found that the models Gigapixel uses are superior and more resilient to the lossy DDS compression artifacts in the original files. The setup for ESRGAN is also significantly more difficult than Gigapixel's, and you have to write your own batching system that splits large images into padded sub-images, upscales those, then stitches the sub-images back together (which Gigapixel does automatically for you). And Gigapixel has a free trial as well!

- For upscaling thousands of files like I'm doing, you have to script most of this in some way; otherwise you'll be scaling/updating by hand for hundreds of hours.
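The padded sub-image splitting mentioned in the ESRGAN note can be sketched as a pure coordinate calculation. Read each tile with some overlap, upscale it, then keep only the unpadded centre when stitching, so the seams fall inside context the model has already seen. Tile size and padding here are arbitrary, and the helper names are hypothetical.

```python
def tiles(width, height, tile=512, pad=16):
    """Split an image into tile x tile regions, each read with `pad` extra pixels
    of context on every side (clipped at the border). Yields (read_box, keep_box)
    as (x0, y0, x1, y1) in source pixels."""
    for y0 in range(0, height, tile):
        for x0 in range(0, width, tile):
            x1, y1 = min(x0 + tile, width), min(y0 + tile, height)
            read = (max(0, x0 - pad), max(0, y0 - pad),
                    min(width, x1 + pad), min(height, y1 + pad))
            yield read, (x0, y0, x1, y1)


def crop_in_upscaled_tile(read, keep, scale):
    """Where the kept (unpadded) region sits inside the upscaled padded tile."""
    rx0, ry0, _, _ = read
    x0, y0, x1, y1 = keep
    return ((x0 - rx0) * scale, (y0 - ry0) * scale,
            (x1 - rx0) * scale, (y1 - ry0) * scale)
```

Each upscaled tile is then cropped with `crop_in_upscaled_tile` and pasted at `(x0 * scale, y0 * scale)` in the output image.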